How to Extract Data From a Website: A Comprehensive Guide

In today’s data-driven world, the ability to extract data from a website is a crucial skill for businesses, researchers, and individuals alike. Whether you’re analyzing market trends, gathering competitive intelligence, or building a custom application, web scraping offers a powerful way to access and utilize the vast amounts of information available online. This guide provides a comprehensive overview of the methods, tools, and ethical considerations involved in extracting data from websites.

Understanding Web Scraping

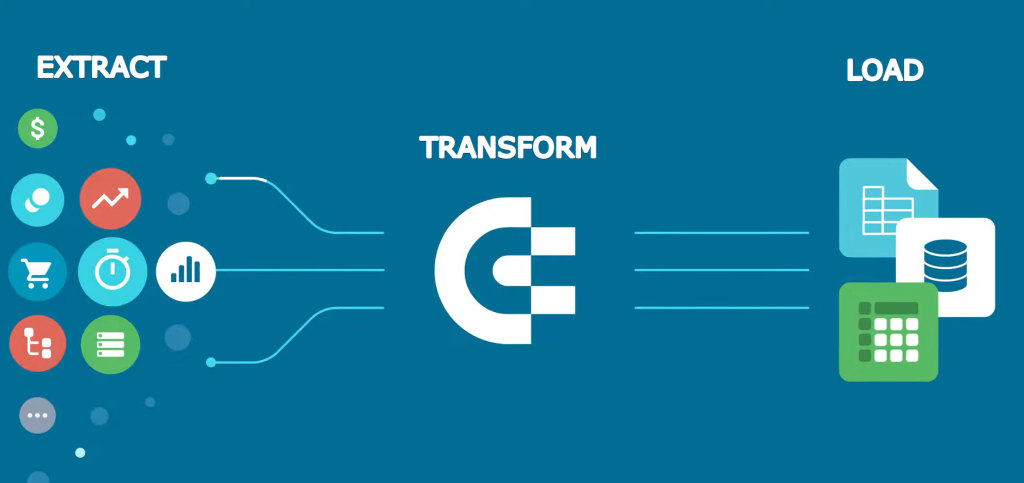

Web scraping, also known as web harvesting or web data extraction, is the process of automatically collecting data from websites. It involves using software or scripts to access a website’s HTML code, identify the desired data, and then extract and store that data in a structured format, such as a CSV file, a database, or an Excel spreadsheet.
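
The access, identify, extract, and store steps described above can be sketched with nothing but Python’s standard library. This is a minimal illustration rather than a recommended toolchain: the HTML fragment, the `item` class name, and the helper names `ItemParser`, `extract_items`, and `to_csv` are all assumptions made up for the example, and the page is an inline string instead of a real download.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment standing in for a downloaded HTML document.
SAMPLE_HTML = """
<ul>
  <li class="item">Widget</li>
  <li class="item">Gadget</li>
  <li class="note">Not a product</li>
</ul>
"""


class ItemParser(HTMLParser):
    """Collect the text of every <li class="item"> element."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_item = False

    def handle_starttag(self, tag, attrs):
        # Identify the desired data: only list items marked class="item".
        if tag == "li" and dict(attrs).get("class") == "item":
            self._in_item = True

    def handle_endtag(self, tag):
        if tag == "li":
            self._in_item = False

    def handle_data(self, data):
        if self._in_item and data.strip():
            self.items.append(data.strip())


def extract_items(html):
    """Parse the HTML and return the matching item names."""
    parser = ItemParser()
    parser.feed(html)
    return parser.items


def to_csv(items):
    """Store the extracted names in CSV form (returned as a string here)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["name"])
    for item in items:
        writer.writerow([item])
    return buffer.getvalue()


items = extract_items(SAMPLE_HTML)
csv_text = to_csv(items)
print(csv_text)
```

In a real project the CSV text would typically be written to a file or loaded into a database, and a dedicated parsing library would replace the hand-written parser class.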

Why Extract Data From a Website?

There are numerous reasons why someone might want to extract data from a website. Some common use cases include:

- Market Research: Gathering data on pricing, product features, and customer reviews to analyze market trends and identify opportunities.

- Competitive Intelligence: Monitoring competitor websites to track their pricing, promotions, and product offerings.

- Lead Generation: Extracting contact information from websites to build a database of potential leads.

- Content Aggregation: Collecting news articles, blog posts, or other content from various sources to create a centralized platform.

- Data Analysis: Gathering data for research purposes, such as analyzing social media trends or tracking stock prices.

- Building Applications: Using web-scraped data to populate databases or power custom applications.

Methods for Extracting Data

There are several methods for extracting data from websites, each with its own advantages and disadvantages:

Manual Copy-Pasting

The simplest method is to manually copy and paste data from a website into a spreadsheet or text file. While this method is suitable for small amounts of data, it’s time-consuming and impractical for larger projects. It also lacks scalability and is prone to human error.

Using Browser Extensions

Several browser extensions, such as Web Scraper and Data Miner, allow you to visually select and extract data from a website directly within your browser. These extensions are generally user-friendly and require no coding knowledge. However, they may have limitations in terms of the complexity of the data they can extract and the number of pages they can scrape.

Utilizing Web Scraping Tools

Web scraping tools, such as ParseHub, Octoparse, and import.io, are dedicated software applications that provide a more robust and flexible way to extract data from websites. These tools typically offer features such as visual point-and-click interfaces, scheduling capabilities, and the ability to handle complex website structures. They often come with free and paid plans, depending on the features and usage limits.

Writing Custom Code

For more complex web scraping tasks, writing custom code in a language such as Python, with libraries such as Beautiful Soup and Scrapy, offers the greatest flexibility and control. This method requires programming knowledge, but it lets you handle complex website structures, dynamic content, and authentication requirements. It also scales further and can be customized more closely to your extraction needs than the other methods.

Tools and Technologies

Several tools and technologies are commonly used for web scraping:

- Python: A popular programming language for web scraping due to its ease of use and extensive libraries.

- Beautiful Soup: A Python library for parsing HTML and XML documents, making it easy to navigate and extract data.

- Scrapy: A powerful Python framework for building web scraping spiders.

- Selenium: A tool for automating web browsers, often used for scraping dynamic websites that rely heavily on JavaScript.

- XPath: A query language for selecting nodes in an XML or HTML document.

- CSS Selectors: Patterns used to select HTML elements by tag name, class, ID, attribute, or position in the document.
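
To make the XPath entry above concrete, here is a small sketch using the standard library’s `xml.etree.ElementTree`, which supports only a limited subset of XPath and requires well-formed markup. The sample document and its class names are assumptions for illustration; real-world HTML is often not well-formed, which is one reason dedicated parsing libraries are commonly used instead. The CSS selector equivalent of the first expression would be `div.product`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for a product listing page.
SAMPLE = """
<html><body>
  <div class="product"><h2>Widget</h2><span class="price">9.99</span></div>
  <div class="product"><h2>Gadget</h2><span class="price">19.50</span></div>
  <div class="banner"><h2>Sale</h2></div>
</body></html>
"""

root = ET.fromstring(SAMPLE.strip())

# XPath: every <div> anywhere in the tree whose class attribute is "product".
product_nodes = root.findall(".//div[@class='product']")

rows = []
for node in product_nodes:
    # Relative paths are evaluated against the current product node.
    name = node.findtext("h2")
    price = node.findtext("span[@class='price']")
    rows.append((name, float(price)))

print(rows)
```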

Ethical Considerations and Legal Issues

While web scraping can be a powerful tool, it’s essential to consider the ethical and legal implications. Some key considerations include:

Respecting Website Terms of Service

Always review a website’s terms of service before scraping it. Many websites explicitly prohibit web scraping, and violating these terms can have legal consequences. If the terms of service forbid web scraping, it’s best to refrain from extracting data from the website.

Avoiding Overloading Servers

Web scraping can put a strain on a website’s servers. To avoid overloading them, implement rate limiting, which involves adding delays between requests to prevent the scraper from sending too many requests in a short period. Also, consider scraping during off-peak hours to minimize the impact on the website’s performance.
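
A fixed delay between requests is the simplest form of the rate limiting described above. The sketch below assumes a hypothetical helper named `fetch_politely`; the `fetch` and `sleep` parameters are injected so the example runs without touching the network, and the stand-in fetch function and URLs are made up for illustration.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one.
            sleep(delay_seconds)
        results.append(fetch(url))
    return results


# Demonstration with stand-ins: the fake fetch returns a string, and the
# fake sleep records the requested pauses instead of actually waiting.
pauses = []
pages = fetch_politely(
    ["/a", "/b", "/c"],
    fetch=lambda url: f"<html>{url}</html>",
    delay_seconds=2.0,
    sleep=pauses.append,
)
print(pages, pauses)
```

In real use, `fetch` would be a function that performs the HTTP request, and the default `time.sleep` would provide the actual pause. A suitable delay depends on the site; there is no universally correct value.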

Respecting Robots.txt

The robots.txt file is a text file that websites use to tell web crawlers and bots which parts of the site should not be accessed. Always check the robots.txt file before scraping a website and respect its directives. Ignoring the robots.txt file is generally considered unethical and can lead to legal issues. The file also indicates which areas the site owner considers off-limits, so treat any disallowed path as data you should not extract.
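
Python’s standard library includes `urllib.robotparser` for checking these directives in code. The sketch below parses an inline, made-up robots.txt so that it runs offline; the rules, the `example-scraper` user agent name, and the URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one is served at /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "example-scraper"  # hypothetical bot name
BASE = "https://www.example-ecommerce-site.com"

# can_fetch() reports whether the rules allow this agent to request the URL.
allowed_products = parser.can_fetch(USER_AGENT, f"{BASE}/products")
allowed_private = parser.can_fetch(USER_AGENT, f"{BASE}/private/report")

# crawl_delay() returns the Crawl-delay value for the agent, or None if unset.
delay = parser.crawl_delay(USER_AGENT)

print(allowed_products, allowed_private, delay)
```

Against a live site, calling `parser.set_url(...)` with the robots.txt address followed by `parser.read()` would take the place of `parse()`.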

Protecting Personal Data

Be mindful of personal data and privacy regulations, such as GDPR and CCPA. Avoid scraping personal information without consent and ensure that you comply with all applicable privacy laws.

Copyright and Intellectual Property

Be aware of copyright laws and intellectual property rights. Do not scrape copyrighted content without permission or use scraped data in a way that infringes on someone else’s intellectual property.

A Practical Example: Extracting Product Prices from an E-commerce Website

Let’s illustrate how to extract data from a website using Python with Beautiful Soup. In this example, we’ll extract product prices from a hypothetical e-commerce website.

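    import requests
    from bs4 import BeautifulSoup

    # Hypothetical listing page; replace with a site you are permitted to scrape.
    url = 'https://www.example-ecommerce-site.com/products'

    # Fetch the page, failing fast on timeouts and HTTP error status codes.
    response = requests.get(url, timeout=10)
    response.raise_for_status()

    soup = BeautifulSoup(response.content, 'html.parser')

    # Each product is assumed to sit in a <div class="product"> container.
    products = soup.find_all('div', class_='product')

    for product in products:
        name = product.find('h2', class_='product-name')
        price = product.find('span', class_='product-price')
        # Skip containers missing either element instead of raising AttributeError.
        if name is None or price is None:
            continue
        print(f'Product: {name.get_text(strip=True)}, Price: {price.get_text(strip=True)}')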

This code snippet first sends an HTTP request to the website. Then, it uses Beautiful Soup to parse the HTML content and find all the elements with the class ‘product’. Finally, it iterates through each product element and extracts the name and price using the corresponding CSS classes.

Advanced Techniques

For more complex web scraping scenarios, consider using the following advanced techniques:

Handling Dynamic Content

Websites that rely heavily on JavaScript to load content dynamically require a different approach. Selenium can be used to automate a web browser and render the JavaScript, allowing you to scrape the dynamically loaded content. Because Selenium drives a real browser much as a human user would, content that appears only after scripts have run becomes available for extraction.

Dealing with Pagination

Many websites display data across multiple pages. To scrape all the data, you need to handle pagination. This involves identifying the pattern in the URLs for each page and iterating through them, scraping the data from each page. This can be done using a loop and updating the URL for each iteration.
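
The loop described above might look like the following sketch. It assumes a hypothetical `?page=N` URL pattern and stops at the first empty page; real sites vary (some use offsets, cursors, or "next" links), so the pattern has to be confirmed by inspecting the site. The helper names and the in-memory `FAKE_SITE` are made up so the example runs without network access.

```python
def page_urls(base_url, first_page=1, max_pages=100):
    """Yield page URLs following an assumed ?page=N pattern."""
    for page in range(first_page, first_page + max_pages):
        yield f"{base_url}?page={page}"


def scrape_all_pages(base_url, fetch_items, max_pages=100):
    """Collect items page by page until a page comes back empty."""
    collected = []
    for url in page_urls(base_url, max_pages=max_pages):
        items = fetch_items(url)
        if not items:
            break  # an empty page is treated as the end of the listing
        collected.extend(items)
    return collected


# Stand-in for a real site: maps each page URL to the items found on it.
FAKE_SITE = {
    "https://www.example-ecommerce-site.com/products?page=1": ["Widget", "Gadget"],
    "https://www.example-ecommerce-site.com/products?page=2": ["Gizmo"],
}

all_items = scrape_all_pages(
    "https://www.example-ecommerce-site.com/products",
    fetch_items=lambda url: FAKE_SITE.get(url, []),
)
print(all_items)
```

The `max_pages` cap is a safety limit so a site that never returns an empty page cannot keep the loop running indefinitely.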

Using Proxies

To avoid being blocked by a website, you can use proxies to rotate your IP address. This involves routing your requests through different proxy servers, making it harder for the website to identify and block your scraper. There are both free and paid proxy services available.
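
As a rough sketch of rotation, the snippet below cycles through a pool of proxy addresses and yields mappings in the `{"http": ..., "https": ...}` form that the `requests` library accepts through its `proxies` argument. The proxy addresses and the `proxy_rotation` helper are hypothetical, and no request is actually sent here.

```python
from itertools import cycle

# Hypothetical proxy addresses; substitute ones you are authorized to use.
PROXY_POOL = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
]


def proxy_rotation(pool):
    """Yield proxy mappings, cycling through the pool indefinitely."""
    for address in cycle(pool):
        yield {"http": address, "https": address}


rotation = proxy_rotation(PROXY_POOL)
first_three = [next(rotation) for _ in range(3)]
print(first_three)
```

Each scraping request would then take the next mapping, for example `requests.get(url, proxies=next(rotation), timeout=10)`.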

Solving CAPTCHAs

Some websites use CAPTCHAs to prevent automated scraping. Services exist that can solve CAPTCHAs automatically, but bypassing them is generally considered unethical, because a CAPTCHA signals that the site does not want automated access. If a website uses CAPTCHAs, make sure you have permission to extract its data before proceeding, and avoid scraping websites that explicitly prohibit it.

Conclusion

Extracting data from a website can be a valuable skill for various purposes. By understanding the different methods, tools, and ethical considerations involved, you can effectively and responsibly gather the data you need. Remember to always respect website terms of service, avoid overloading servers, and comply with privacy regulations. Whether you’re using browser extensions, web scraping tools, or writing custom code, the key is to approach web scraping with a clear understanding of your goals and a commitment to ethical practices. Always ensure you have the right to extract data from the website before beginning your project.