How to Import Data from a Website to Excel: A Comprehensive Guide

In today’s data-driven world, the ability to efficiently extract and analyze information from various sources is crucial. Microsoft Excel, a powerful spreadsheet program, offers several methods to import data from a website to Excel, enabling users to transform raw web data into actionable insights. This guide provides a comprehensive overview of these techniques, catering to both beginners and advanced users.

Why Import Data from a Website to Excel?

Before diving into the how-to, let’s address the ‘why.’ Importing data from websites into Excel offers numerous benefits:

- Data Consolidation: Aggregate information from multiple online sources into a single, manageable spreadsheet.

- Analysis and Reporting: Leverage Excel’s powerful analytical tools to identify trends, patterns, and correlations within web data.

- Automation: Automate the data extraction process to save time and effort, ensuring your data is always up-to-date.

- Customization: Manipulate and format web data to meet specific reporting and analytical needs.

- Scheduled Updates: Some methods can refresh on a schedule or whenever the workbook opens, keeping your Excel sheet in step with changes on the website.

Methods to Import Data from a Website to Excel

Several methods exist to import data from a website to Excel, each with its strengths and limitations. Choosing the right method depends on the website’s structure, the type of data, and your technical expertise.

Using Excel’s Built-in ‘Get & Transform Data’ (Power Query) Feature

Excel’s Power Query, accessible through the ‘Data’ tab under ‘Get & Transform Data’, is a robust tool for importing and transforming data from various sources, including websites.

- Open Excel and Navigate to the ‘Data’ Tab: Create a new Excel workbook or open an existing one, then select the ‘Data’ tab.

- Select ‘From Web’: In the ‘Get & Transform Data’ group, click ‘From Web’. A dialog box will appear.

- Enter the Website URL: Paste the URL of the website containing the data you want to import. Click ‘OK’.

- Choose the Data: Excel will attempt to identify tables on the webpage. A ‘Navigator’ window will display a list of available tables and the entire webpage document. Preview each table to find the one containing the desired data.

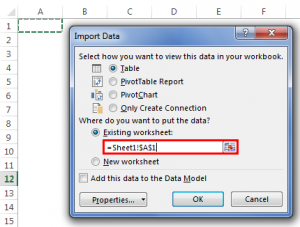

- Load or Transform Data:

  - Load: If the data is clean and ready to use, click ‘Load’ to directly import it into your Excel sheet.

  - Transform Data: If the data requires cleaning or transformation, click ‘Transform Data’ to open the Power Query Editor.

- Power Query Editor (Optional): The Power Query Editor allows you to clean, transform, and reshape your data. You can perform operations such as:

  - Removing unnecessary columns.

  - Filtering rows based on specific criteria.

  - Changing data types (e.g., text to number).

  - Splitting columns.

  - Merging columns.

- Close & Load: Once you’ve completed your transformations, click ‘Close & Load’ to import the transformed data into your Excel sheet.

This method is particularly effective for websites with well-structured HTML tables: Power Query detects and extracts the table data automatically, so the process is relatively straightforward.
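If you want to create the same kind of query from a macro, Excel 2016 and later expose Power Query through the `Workbook.Queries` collection. The sketch below is a minimal example under stated assumptions: the URL and the query name `WebTable` are placeholders, and the M formula assumes the data sits in the first table that `Web.Page` detects on the page. It only adds the query; you can then load it to a sheet from the ‘Queries & Connections’ pane.

```vba
Sub AddPowerQueryFromWeb()
    ' Hypothetical query name and URL; replace both with your own.
    Const QUERY_NAME As String = "WebTable"
    Const PAGE_URL As String = "https://example.com/data.html"
    Dim mFormula As String

    ' M code: parse the page with Web.Page, then take the Data column
    ' of the first detected table (row 0 of the result).
    mFormula = "let" & vbCrLf & _
               "    Source = Web.Page(Web.Contents(""" & PAGE_URL & """))," & vbCrLf & _
               "    FirstTable = Source{0}[Data]" & vbCrLf & _
               "in" & vbCrLf & _
               "    FirstTable"

    ' Queries.Add raises an error if a query with this name already exists.
    ThisWorkbook.Queries.Add Name:=QUERY_NAME, Formula:=mFormula
End Sub
```

This mirrors what the Navigator generates when you pick the first table, so opening the new query in the Power Query Editor lets you continue with the same cleaning steps listed above.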

Using Web Queries (.iqy Files)

Web Queries provide a more traditional approach to importing data from websites. While Power Query is generally preferred, Web Queries can still be useful in certain situations.

- Create a New Text File: Open a text editor such as Notepad.

- Add the Web Query Definition: Enter the following lines, replacing ‘YOUR_WEBSITE_URL’ with the full URL of the page (including ‘https://’). The first line marks the file as a web query, the second is the query format version, and the third is the address to load:

```
WEB
1
YOUR_WEBSITE_URL
```

- Save the .iqy File: Save the file with a ‘.iqy’ extension (e.g., ‘data.iqy’) in a location you can easily access. In Notepad, set ‘Save as type’ to ‘All Files’ so that ‘.txt’ is not appended to the name.

- Open Excel and Navigate to the ‘Data’ Tab: As before, open Excel and go to the ‘Data’ tab.

- Select ‘Existing Connections’: In the ‘Get & Transform Data’ group, click ‘Existing Connections’, then ‘Browse for More...’.

- Choose the .iqy File: Browse to the location where you saved the .iqy file and select it.

- Import the Data: In the ‘Import Data’ dialog, choose where to place the data (for example, cell A1 of the current sheet) and click ‘OK’. Excel runs the web query and imports the page content into the sheet. You can also open the .iqy file directly with ‘File’ > ‘Open’.

Web Queries are simpler than Power Query but offer far less flexibility in data transformation, and as a legacy feature they may struggle with modern pages. This method is useful when you need a quick import and don’t require extensive data manipulation.
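A web query can also be created from VBA, which is roughly the macro equivalent of an .iqy file. The sketch below assumes a placeholder URL and that you want only the first table on the page, written to cell A1 of the active sheet; `QueryTables.Add` with a `URL;` connection string and the `Web...` properties are part of Excel's object model.

```vba
Sub AddLegacyWebQuery()
    ' Creates a legacy web query on the active sheet.
    ' The URL below is a placeholder.
    Dim qt As QueryTable

    ' The "URL;" prefix marks this as a web query connection.
    Set qt = ActiveSheet.QueryTables.Add( _
        Connection:="URL;https://example.com/data.html", _
        Destination:=ActiveSheet.Range("A1"))

    With qt
        .WebSelectionType = xlSpecifiedTables  ' import only the listed tables
        .WebTables = "1"                       ' "1" = first table on the page
        .WebFormatting = xlWebFormattingNone   ' plain values, no HTML formatting
        .Refresh BackgroundQuery:=False        ' run now and wait until done
    End With
End Sub
```

Setting `BackgroundQuery:=False` makes the macro wait for the import, so any code that follows can safely read the imported cells.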

Using VBA (Visual Basic for Applications)

For pages with complex structures, or when you need to fully automate the extraction process, VBA provides a powerful solution. VBA lets you write custom code to download web pages, parse their HTML, and extract specific data elements.

- Open the VBA Editor: In Excel, press ‘Alt + F11’ to open the VBA editor.

- Insert a New Module: In the VBA editor, go to ‘Insert’ -> ‘Module’.

- Write the VBA Code: Write VBA code to:

  - Create an XMLHTTP object to send an HTTP request to the website.

  - Retrieve the HTML content of the webpage.

  - Parse the HTML using an HTML parser (e.g., MSHTML).

  - Extract the desired data elements.

  - Write the extracted data to your Excel sheet.

- Run the Code: Run the VBA code to extract and import the data.

Using VBA requires programming knowledge but offers the greatest control and flexibility. It is the best fit when the page layout is complex or when you need sophisticated data manipulation during the import.

Here’s a basic example VBA code snippet:

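```vba
Sub ImportDataFromWeb()
    ' Downloads a page and copies its first HTML table into Sheet1.
    Dim XMLPage As Object
    Dim HTMLDoc As Object
    Dim Table As Object
    Dim TR As Object, TD As Object
    Dim i As Long, j As Long
    Dim URL As String

    URL = "YOUR_WEBSITE_URL"

    ' Send a synchronous HTTP GET request.
    Set XMLPage = CreateObject("MSXML2.XMLHTTP.6.0")
    XMLPage.Open "GET", URL, False
    XMLPage.send

    If XMLPage.Status <> 200 Then
        MsgBox "Request failed with HTTP status " & XMLPage.Status
        Exit Sub
    End If

    ' Load the response into an MSHTML document so it can be queried like a DOM.
    Set HTMLDoc = CreateObject("HTMLFile")
    HTMLDoc.body.innerHTML = XMLPage.responseText

    ' Assumes the data is in the first <table> on the page.
    If HTMLDoc.getElementsByTagName("table").Length = 0 Then
        MsgBox "No <table> element found on the page."
        Exit Sub
    End If
    Set Table = HTMLDoc.getElementsByTagName("table")(0)

    ' Write each row to the sheet; Cells covers both <th> and <td> elements.
    i = 1
    For Each TR In Table.Rows
        j = 1
        For Each TD In TR.Cells
            Sheet1.Cells(i, j).Value = TD.innerText
            j = j + 1
        Next TD
        i = i + 1
    Next TR
End Sub
```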

Disclaimer: This is a simplified example. You’ll likely need to adapt the code based on the specific structure of the website you’re scraping. Websites often change their structure, which can break your VBA code. Always ensure you’re complying with the website’s terms of service regarding data scraping.

Using Third-Party Tools

Several third-party tools are designed specifically for web scraping and data extraction. These tools often provide a user-friendly interface and advanced features, such as automatic data detection, scheduling, and data transformation.

Examples of popular web scraping tools include:

- ParseHub

- WebHarvy

- Octoparse

- Import.io

These tools typically offer a visual interface for selecting data elements and defining extraction rules. They can be a good option if you need to import data from a website to Excel regularly and don’t want to write code.

Best Practices for Importing Data from Websites

Regardless of the method you choose, consider these best practices:

- Respect Website Terms of Service: Always review the website’s terms of service to ensure you’re allowed to scrape data. Some websites explicitly prohibit scraping.

- Handle Data Responsibly: Be mindful of the amount of data you’re extracting and avoid overwhelming the website’s servers. Implement delays in your code to prevent overloading the server.

- Error Handling: Implement error handling in your code to gracefully handle situations where data is missing or the website structure changes.

- Data Validation: After importing the data, validate it to ensure its accuracy and completeness.

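- Schedule Regular Updates: If you need to keep your data up-to-date, schedule regular updates using Power Query’s refresh options (such as ‘Refresh every n minutes’ or ‘Refresh data when opening the file’ in the query properties) or VBA’s Application.OnTime method.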
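For the VBA route, the sketch below combines scheduling with basic error handling. It assumes a one-hour interval (a placeholder) and simply refreshes every query and connection in the workbook with `ThisWorkbook.RefreshAll`; Excel must stay open for `Application.OnTime` to fire. Note that queries set to refresh in the background may report errors on their own rather than through this handler.

```vba
' Hypothetical interval; adjust to how often the source actually changes.
Private Const REFRESH_INTERVAL As String = "01:00:00"
Private NextRun As Date

Sub StartScheduledRefresh()
    ' Queue the next run and remember its time so it can be cancelled.
    NextRun = Now + TimeValue(REFRESH_INTERVAL)
    Application.OnTime NextRun, "ScheduledRefresh"
End Sub

Sub StopScheduledRefresh()
    ' Cancel the pending run; ignore the error if nothing is queued.
    On Error Resume Next
    Application.OnTime NextRun, "ScheduledRefresh", , False
End Sub

Sub ScheduledRefresh()
    On Error GoTo RefreshFailed
    ' Refresh every query and connection in this workbook.
    ThisWorkbook.RefreshAll
    ' Reschedule only after a successful refresh.
    StartScheduledRefresh
    Exit Sub
RefreshFailed:
    MsgBox "Scheduled refresh failed: " & Err.Description
End Sub
```

Run `StartScheduledRefresh` once to begin and `StopScheduledRefresh` before closing the workbook; stopping the schedule after a failure is a deliberate choice so a broken source does not trigger repeated error messages.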

Troubleshooting Common Issues

You may encounter issues when importing data from websites. Here are some common problems and potential solutions:

- Website Structure Changes: If the website’s structure changes, your Power Query or VBA code may break. You’ll need to update your queries or code to reflect the new structure.

- Website Requires Authentication: If the website requires a login, you’ll need to provide authentication credentials in your Power Query settings or VBA code.

- Data is Dynamically Loaded: If the data is loaded with JavaScript after the page opens, Power Query and XMLHTTP requests may only see the initial HTML, without the data. You may need a headless browser or browser-automation tool (e.g., Selenium or Puppeteer) to render the JavaScript first and then extract the data.

- Rate Limiting: Some websites implement rate limiting to prevent excessive scraping. If you encounter rate limiting, you’ll need to slow down your data extraction process.
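A simple way to slow down a VBA scraper is to pause between requests and back off when the server answers HTTP 429 (Too Many Requests). The sketch below assumes a hypothetical list of URLs, a 2-second pause between pages, and up to three attempts with a growing wait; it only reports what it received, leaving the parsing to code like the table-reading loop in the VBA section.

```vba
Sub FetchPagesPolitely()
    ' Hypothetical list of pages to download; replace with your own.
    Dim urls As Variant
    urls = Array("https://example.com/page1.html", _
                 "https://example.com/page2.html")

    Dim http As Object
    Dim k As Long, attempt As Long
    Set http = CreateObject("MSXML2.XMLHTTP.6.0")

    For k = LBound(urls) To UBound(urls)
        For attempt = 1 To 3
            http.Open "GET", urls(k), False
            http.send
            ' Stop retrying if not rate-limited, or if out of attempts.
            If http.Status <> 429 Or attempt = 3 Then Exit For
            ' HTTP 429: wait 10, then 20 seconds before retrying.
            Application.Wait Now + TimeValue("0:00:10") * attempt
        Next attempt

        If http.Status = 200 Then
            ' Placeholder: parse http.responseText here.
            Debug.Print urls(k) & ": " & Len(http.responseText) & " characters"
        Else
            Debug.Print urls(k) & ": HTTP " & http.Status
        End If

        ' Pause between pages so the server is not flooded.
        Application.Wait Now + TimeValue("0:00:02")
    Next k
End Sub
```

Network failures (no connection, DNS errors) raise a run-time error at `http.send` and are not handled here; wrap that call in an `On Error` block if your source is unreliable.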

Conclusion

Importing data from websites into Excel empowers you to analyze and leverage online information effectively. Whether you choose Power Query, Web Queries, VBA, or third-party tools, understanding the strengths and limitations of each method is crucial. By following the best practices outlined in this guide, you can streamline your data extraction process and unlock valuable insights from the web. Remember to always respect website terms of service and handle data responsibly.