How to Extract Data from a Website to Excel: A Comprehensive Guide

In today’s data-driven world, the ability to pull data from websites and analyze it in a familiar environment like Microsoft Excel is a crucial skill. Whether you’re a market researcher, a business analyst, or simply someone who needs to gather information quickly, knowing how to extract data from a website to Excel can save hours of manual data entry and open up new avenues for insight. This guide covers several methods, from simple copy-pasting to advanced web scraping techniques, so you can choose the best approach for your needs.

Why Extract Data from Websites to Excel?

Before diving into the ‘how,’ let’s consider the ‘why.’ Extracting data from websites to Excel offers numerous benefits:

- Efficiency: Automate the process of gathering data, eliminating manual data entry and saving time.

- Accuracy: Reduce the risk of human error associated with manual data entry.

- Analysis: Leverage Excel’s powerful analytical tools to identify trends, patterns, and insights.

- Reporting: Create customized reports and visualizations to communicate your findings effectively.

- Competitive Advantage: Gain insights into competitor pricing, product offerings, and market trends.

Methods for Extracting Data from Websites to Excel

Several methods exist for extracting data from a website to Excel, each with its own strengths and weaknesses. The best approach depends on the complexity of the data, the structure of the website, and your technical skills.

Copy-Pasting: The Simplest Approach

The most basic method involves manually copying data from a website and pasting it into an Excel spreadsheet. While simple, this approach is only suitable for small amounts of data that are easily accessible and consistently formatted on the webpage. It’s prone to errors and very time-consuming for larger datasets. Because nothing is automated, you have to repeat the whole process whenever the data on the page changes.

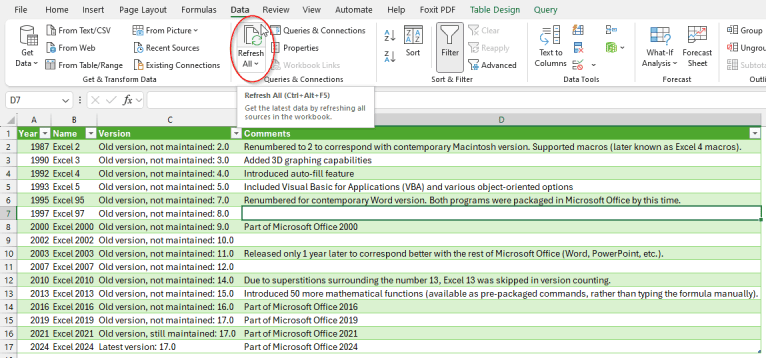

Excel’s Built-in Web Query Feature

Excel offers a built-in web query feature that allows you to import data from tables or preformatted data structures directly from a website. This is a more efficient option than copy-pasting, but it still has limitations.

- Open Excel: Create a new worksheet or open an existing one.

- Go to the Data Tab: Click on the ‘Data’ tab in the Excel ribbon.

- Choose From Web: In the ‘Get & Transform Data’ group, click ‘From Web.’

- Enter the URL: Enter the URL of the webpage containing the data you want to extract.

- Select the Table: Excel will display a list of tables found on the webpage. Select the table containing the desired data.

- Load the Data: Click ‘Load’ to import the data into your Excel spreadsheet.

This method works best when the data is presented in a well-defined HTML table. However, many websites use more complex layouts, making it difficult for Excel to identify and extract the data accurately. In that case, click ‘Transform Data’ (labeled ‘Edit’ in older Excel versions) instead of ‘Load’ to open the Power Query Editor and refine the extraction.
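To see why a well-defined HTML table matters, here is a minimal sketch (not Excel's actual implementation) of what "finding the tables on a page" amounts to. It uses only Python's standard library; the class name `TableLister`, the helper `list_tables`, and the sample HTML are illustrative assumptions, and nested tables are not handled.

```python
from html.parser import HTMLParser


class TableLister(HTMLParser):
    """Collect every <table> on a page as a list of rows (lists of cell text)."""

    def __init__(self):
        super().__init__()
        self.tables = []   # one list of rows per <table> found
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def list_tables(html):
    """Return all tables in the given HTML string."""
    parser = TableLister()
    parser.feed(html)
    parser.close()
    return parser.tables


# Hypothetical page: one real table plus the same kind of data laid out in <div>s
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
<div class="card"><span>Gizmo</span><span>5.00</span></div>
"""

tables = list_tables(SAMPLE_HTML)
for number, rows in enumerate(tables, start=1):
    width = len(rows[0]) if rows else 0
    print(f"Table {number}: {len(rows)} rows x {width} columns")
```

Only the `<table>` element is picked up. The "Gizmo" entry, laid out with `<div>` and `<span>` tags, never appears in the list, which is the kind of layout that table-based import struggles with.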

Power Query (Get & Transform Data)

Power Query, also known as Get & Transform Data, is a powerful data transformation and extraction tool built into Excel. It allows you to connect to various data sources, including websites, and clean, transform, and load the data into your spreadsheet. Power Query is significantly more robust than the basic web query feature.

Using Power Query, you can:

- Connect to web pages using URLs.

- Navigate through the HTML structure to locate specific data elements.

- Filter and transform the data to meet your needs.

- Combine data from multiple sources.

- Automate the data extraction process.

To use Power Query to extract data from a website to Excel:

- Open Excel: Create a new worksheet or open an existing one.

- Go to the Data Tab: Click on the ‘Data’ tab in the Excel ribbon.

- Choose From Web: In the ‘Get & Transform Data’ group, click ‘From Web.’

- Enter the URL: Enter the URL of the webpage containing the data you want to extract.

- Navigate the Web Page: Use the Navigator window to explore the structure of the web page. You can often drill down into specific tables or lists.

- Transform the Data: Use the Power Query Editor to clean, transform, and filter the data as needed. Common transformations include removing unwanted columns, changing data types, and filtering rows.

- Load the Data: Click ‘Close & Load’ to import the transformed data into your Excel spreadsheet.

Power Query is a versatile tool that can handle more complex website structures than the basic web query feature. It requires some learning, but the benefits in terms of data extraction efficiency and flexibility are significant.
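Power Query applies its transformations through the editor and its own formula language, so the following is not Power Query code. It is a plain Python analogue, offered as a rough sketch, of the three common transformations named above: removing unwanted columns, changing data types, and filtering rows. The sample rows, the column names, and the `transform` helper are hypothetical.

```python
# Hypothetical rows as they might arrive from a web page: everything is text
raw_rows = [
    {"Product": "Widget", "Price": "9.99", "Notes": "n/a"},
    {"Product": "Gadget", "Price": "24.50", "Notes": "new"},
    {"Product": "Gizmo", "Price": "5.00", "Notes": ""},
]


def transform(rows, drop_columns, min_price):
    """Drop columns, convert Price to a number, and keep rows at or above min_price."""
    cleaned = []
    for row in rows:
        # Remove unwanted columns
        kept = {key: value for key, value in row.items() if key not in drop_columns}
        # Change the data type of Price from text to a number
        kept["Price"] = float(kept["Price"])
        # Filter rows
        if kept["Price"] >= min_price:
            cleaned.append(kept)
    return cleaned


result = transform(raw_rows, drop_columns={"Notes"}, min_price=6.0)
for row in result:
    print(row)
```

Each step here corresponds to one applied step in a query: the order matters in the same way, since the price filter only works once the column has been converted to a number.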

Web Scraping with Programming Languages (Python)

For complex websites or when you need to extract data on a regular basis, web scraping with a programming language like Python is often the best solution. Web scraping involves writing code to automatically extract data from websites. Python offers several powerful libraries for web scraping, including:

- Beautiful Soup: A library for parsing HTML and XML documents. It makes it easy to navigate the structure of a web page and extract specific data elements.

- Scrapy: A powerful web scraping framework that provides a structured approach to building web scrapers. It’s ideal for complex scraping projects.

- Requests: A library for making HTTP requests. It allows you to download the HTML content of a web page.

Here’s a basic example of how to extract data from a website to Excel using Python, Requests, Beautiful Soup, and Pandas (for exporting to Excel):

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    # URL of the website to scrape
    url = "https://example.com/data-table"

    # Send an HTTP request; fail early on a timeout or an HTTP error status
    response = requests.get(url, timeout=10)
    response.raise_for_status()

    # Parse the HTML content
    soup = BeautifulSoup(response.content, 'html.parser')

    # Find the first table on the page
    table = soup.find('table')
    if table is None:
        raise SystemExit("No <table> element found on the page")

    # Extract the text of every data cell, row by row
    # (header rows that contain only <th> cells are skipped)
    data = []
    for row in table.find_all('tr'):
        columns = row.find_all('td')
        if columns:
            data.append([column.text.strip() for column in columns])

    # Create a Pandas DataFrame from the data
    df = pd.DataFrame(data)

    # Export the DataFrame to an Excel file (writing .xlsx requires openpyxl)
    df.to_excel('data.xlsx', index=False)
    print("Data extracted and saved to data.xlsx")

This snippet is a simple example: it reads only the first table on the page and skips header cells, so you’ll need to adapt it to the structure of the website you’re scraping. Web scraping requires programming skills and a good understanding of HTML and CSS. It’s a powerful technique, but be aware of the legal and ethical considerations that come with it.
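If installing an Excel writer for Pandas is not an option, one possible variation is to save the scraped rows as a CSV file, which Excel opens directly. The sketch below uses only the standard library; the `save_rows_as_csv` helper, the sample rows, and the output location are assumptions made for illustration.

```python
import csv
import tempfile
from pathlib import Path


def save_rows_as_csv(rows, path, header=None):
    """Write rows (lists of cell values) to a CSV file that Excel can open."""
    # utf-8-sig adds a byte-order mark, which Excel uses to detect UTF-8;
    # newline="" lets the csv module control the line endings itself
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        writer = csv.writer(handle)
        if header:
            writer.writerow(header)
        writer.writerows(rows)


# Hypothetical scraped rows, including a non-ASCII character
rows = [["Widget", "9.99"], ["Café table", "120.00"]]

# Written to the system temp directory here; use any path you like
output_path = Path(tempfile.gettempdir()) / "data.csv"
save_rows_as_csv(rows, output_path, header=["Product", "Price"])
print(f"Saved {len(rows)} rows to {output_path}")
```

The trade-off is that a CSV file carries no formatting, formulas, or multiple sheets, so this suits raw data hand-off rather than finished reports.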

Web Scraping Tools (No-Code Solutions)

If you’re not comfortable with programming, several no-code web scraping tools are available. These tools provide a visual interface for selecting the data you want to extract and configuring the scraping process. Some popular web scraping tools include:

- Octoparse: A powerful and user-friendly web scraping tool that allows you to extract data from complex websites without writing any code.

- ParseHub: A visual web scraping tool that allows you to extract data from dynamic websites that use AJAX and JavaScript.

- WebHarvy: A point-and-click web scraping tool that’s easy to use and offers a wide range of features.

These tools often offer features such as:

- Visual point-and-click interface for selecting data.

- Support for pagination and infinite scrolling.

- Scheduled scraping.

- Data cleaning and transformation.

- Export to Excel, CSV, and other formats.
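Pagination support, one of the features listed above, usually boils down to a loop that keeps requesting the next page until no more rows come back. A minimal sketch of that idea follows; the function `collect_all_pages` and the stand-in fetcher are hypothetical, and a real scraper would replace `fake_fetch_page` with code that downloads and parses each page.

```python
def collect_all_pages(fetch_page, max_pages=50):
    """Call fetch_page(1), fetch_page(2), ... until a page returns no rows."""
    all_rows = []
    for page_number in range(1, max_pages + 1):
        rows = fetch_page(page_number)
        if not rows:
            break  # an empty page means we have run past the last one
        all_rows.extend(rows)
    return all_rows


# Stand-in for a paginated website: page number -> rows on that page
FAKE_SITE = {
    1: [["Widget", "9.99"], ["Gadget", "24.50"]],
    2: [["Gizmo", "5.00"]],
}


def fake_fetch_page(page_number):
    """Pretend to download one page; unknown pages come back empty."""
    return FAKE_SITE.get(page_number, [])


rows = collect_all_pages(fake_fetch_page)
print(f"Collected {len(rows)} rows")
```

The `max_pages` cap is a safety net so that a site that never returns an empty page cannot keep the loop running indefinitely.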

Legal and Ethical Considerations

Before extracting data from a website to Excel, it’s crucial to consider the legal and ethical implications. Always review the website’s terms of service and robots.txt file to understand its policies on web scraping. Avoid overloading the website’s servers with excessive requests, and respect any restrictions on the use of the extracted data.
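Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline sample so it runs without network access; the robots.txt content, the user agent name, and the helper functions are assumptions for illustration. Against a live site you would call `set_url()` and `read()` on the parser instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-research-bot"  # hypothetical name for your scraper

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url):
    """Return True if robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


def polite_delay(default_seconds=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default_seconds


print(allowed("https://example.com/data-table"))
print(allowed("https://example.com/private/report"))
print(polite_delay())
```

In a scraping loop, you would skip any URL for which `allowed()` returns False and pause for `polite_delay()` seconds between requests. Note that robots.txt does not replace the terms of service, which still need to be read separately.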

Choosing the Right Method

The best method for extracting data from a website to Excel depends on several factors:

- Data Volume: For small amounts of data, copy-pasting or Excel’s built-in web query feature may suffice. For larger datasets, Power Query or web scraping is more efficient.

- Website Complexity: Simple websites with well-structured data can be scraped using Excel’s web query feature or Power Query. Complex websites may require web scraping with Python or a no-code web scraping tool.

- Technical Skills: If you have programming skills, web scraping with Python offers the most flexibility and control. If you’re not comfortable with programming, Power Query or a no-code web scraping tool may be a better option.

- Frequency of Extraction: If you need to extract data on a regular basis, automating the process with Power Query or web scraping is essential.

Conclusion

Extracting data from a website to Excel can save you time and effort while giving you data you can actually analyze. Each method covered here has its place: copy-pasting for one-off snippets, Excel’s built-in tools and Power Query for well-structured tables, and Python or a no-code tool for complex sites and recurring jobs. Whichever you choose, consider the legal and ethical implications before scraping any website. Try the different options to find the one that best suits your skills and requirements, and keep up with changes in scraping techniques and tools so your process stays efficient. Happy scraping!