Unlocking the Web’s Data: A Comprehensive Guide to Page Scrapers

In today’s data-driven world, the ability to extract information from websites is a crucial skill. This is where page scrapers come in. A page scraper, at its core, is a tool designed to automatically extract data from websites. From e-commerce product details to news articles and social media updates, page scrapers can efficiently collect and organize vast amounts of online information. This article provides a comprehensive overview of page scrapers, exploring their functionality, applications, ethical considerations, and the different types available. Understanding how page scrapers work and their proper usage is essential for anyone looking to leverage web data for business intelligence, research, or personal projects. This exploration will provide a clear understanding of what page scrapers are and how to utilize them effectively and ethically.

What is a Page Scraper?

A page scraper, also known as a web scraper, is a software program or script that extracts data from websites. It automates the process of browsing a website, identifying specific data points, and saving that data in a structured format, such as a CSV file, JSON, or a database. Unlike manual data extraction, which can be time-consuming and error-prone, page scrapers offer a fast, efficient, and reliable way to gather information from the web. They are invaluable tools for researchers, marketers, and businesses looking to gain insights from online data.

How Page Scrapers Work

The process of web scraping typically involves the following steps:

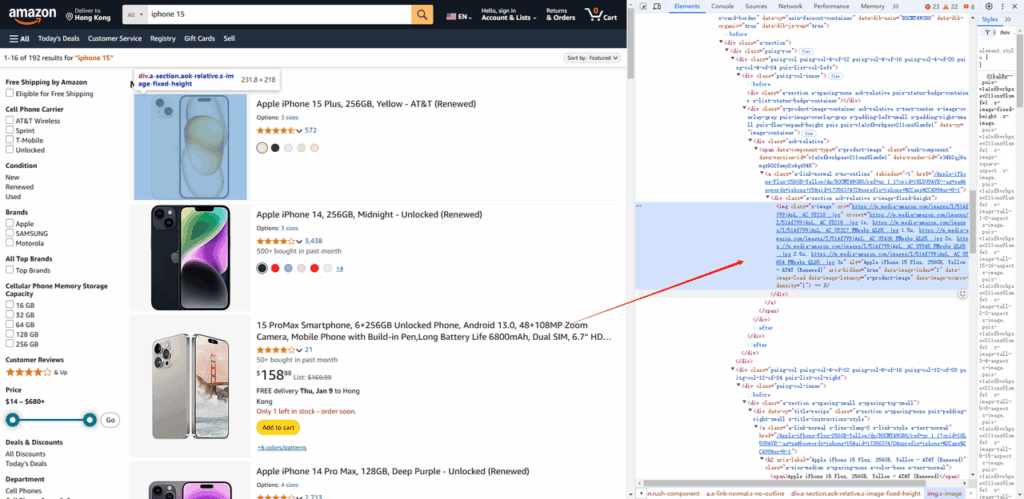

- Requesting the Web Page: The page scraper sends an HTTP request to the target website, similar to how a web browser requests a page.

- Parsing the HTML: Once the server responds with the HTML content of the page, the page scraper parses the HTML code to understand its structure.

- Locating the Data: Using techniques like CSS selectors, XPath expressions, or regular expressions, the page scraper identifies the specific data elements to extract. These elements could be text, images, links, or any other content on the page.

- Extracting the Data: The page scraper extracts the identified data from the HTML structure.

- Storing the Data: Finally, the extracted data is stored in a structured format, such as a CSV file, JSON file, or a database, for further analysis or use.

Types of Page Scrapers

Page scrapers come in various forms, each with its own strengths and weaknesses. Here are some common types:

Browser Extensions

Browser extensions, such as Web Scraper or Data Miner, are easy-to-use tools that allow you to scrape data directly from your web browser. They are often suitable for simple scraping tasks and don’t require programming knowledge. However, they may be limited in terms of scalability and complex scraping scenarios.

Desktop Software

Desktop software solutions, like Octoparse or ParseHub, offer more advanced features and capabilities compared to browser extensions. They typically provide a visual interface for designing scraping workflows and can handle more complex website structures. These tools often include features like scheduling, IP rotation, and data cleaning.

Cloud-Based Scraping Services

Cloud-based scraping services, such as Apify or Scrapinghub, provide a scalable and reliable infrastructure for web scraping. They handle the complexities of managing proxies, handling CAPTCHAs, and dealing with anti-scraping measures. These services are ideal for large-scale scraping projects and require some programming knowledge to integrate with their APIs.

Custom-Coded Scrapers

For highly customized scraping needs, developers can create custom-coded page scrapers using programming languages like Python (with libraries like Beautiful Soup and Scrapy) or Node.js (with libraries like Cheerio and Puppeteer). This approach provides the most flexibility and control over the scraping process but requires significant programming expertise. [See also: Python Web Scraping Tutorial]

Applications of Page Scrapers

Page scrapers have a wide range of applications across various industries:

- E-commerce: Monitoring competitor pricing, tracking product availability, and gathering product reviews.

- Marketing: Collecting leads, analyzing customer sentiment, and tracking brand mentions.

- Research: Gathering data for academic research, market analysis, and trend forecasting.

- Finance: Monitoring stock prices, tracking economic indicators, and gathering financial news.

- Real Estate: Collecting property listings, tracking rental prices, and analyzing market trends.

- News Aggregation: Gathering news articles from various sources and creating customized news feeds.

Ethical Considerations and Legal Aspects

While page scraping can be a powerful tool, it’s crucial to use it ethically and legally. Here are some key considerations:

- Respect Robots.txt: The robots.txt file specifies which parts of a website should not be accessed by bots. Always respect these directives.

- Avoid Overloading Servers: Send requests at a reasonable rate to avoid overloading the target website’s servers. Implement delays and throttling mechanisms.

- Comply with Terms of Service: Carefully review the website’s terms of service to ensure that scraping is permitted. Some websites explicitly prohibit scraping.

- Respect Copyright and Intellectual Property: Do not scrape copyrighted content or data that is protected by intellectual property laws.

- Data Privacy: Be mindful of personal data and comply with data privacy regulations, such as GDPR and CCPA. Avoid scraping sensitive personal information without consent.

Best Practices for Effective Page Scraping

To ensure successful and ethical page scraping, follow these best practices:

- Start Small: Begin with a small-scale scraping project to test your code and ensure that it works correctly.

- Use Proxies: Rotate IP addresses using proxies to avoid being blocked by the target website.

- Implement Error Handling: Implement robust error handling to gracefully handle unexpected errors and prevent your scraper from crashing.

- Use User Agents: Set a realistic user agent to mimic a web browser and avoid being identified as a bot.

- Monitor Your Scraper: Regularly monitor your scraper to ensure that it is working correctly and that the data being extracted is accurate.

- Be Prepared to Adapt: Websites change frequently, so be prepared to adapt your scraper to changes in the website’s structure.

Tools and Technologies for Page Scraping

Several tools and technologies can be used for page scraping, each with its own advantages and disadvantages:

- Python: A versatile programming language with powerful libraries like Beautiful Soup, Scrapy, and Selenium.

- Beautiful Soup: A Python library for parsing HTML and XML documents. It provides a simple way to navigate and search the HTML structure.

- Scrapy: A Python framework for building web scrapers and crawlers. It provides a robust and scalable architecture for handling complex scraping tasks.

- Selenium: A web automation framework that can be used to interact with web pages like a real user. It’s useful for scraping dynamic websites that rely heavily on JavaScript.

- Node.js: A JavaScript runtime environment that can be used for building web scrapers with libraries like Cheerio and Puppeteer.

- Cheerio: A fast and flexible HTML parsing library for Node.js. It provides a jQuery-like syntax for selecting and manipulating HTML elements.

- Puppeteer: A Node.js library that provides a high-level API for controlling headless Chrome or Chromium. It’s useful for scraping dynamic websites and generating screenshots.

Overcoming Anti-Scraping Measures

Many websites employ anti-scraping measures to prevent bots from accessing their data. These measures can include IP blocking, CAPTCHAs, and dynamic content rendering. Here are some techniques for overcoming these challenges:

- IP Rotation: Use a pool of proxy servers to rotate IP addresses and avoid being blocked.

- CAPTCHA Solving: Integrate CAPTCHA solving services to automatically solve CAPTCHAs.

- Headless Browsers: Use headless browsers like Puppeteer or Selenium to render dynamic content and interact with web pages like a real user.

- User Agent Rotation: Rotate user agent strings to mimic different web browsers and avoid being identified as a bot.

- Request Throttling: Implement delays and throttling mechanisms to reduce the request rate and avoid overloading the target website’s servers.

The Future of Page Scraping

As the web continues to evolve, page scraping will likely become even more sophisticated. Machine learning and artificial intelligence are being increasingly used to enhance scraping techniques, such as identifying relevant data and handling complex website structures. Additionally, the legal and ethical considerations surrounding web scraping will continue to be debated and refined. Staying informed about the latest trends and best practices in page scraping is essential for anyone looking to leverage web data effectively and responsibly. The use of page scrapers will continue to grow as businesses seek to gain a competitive edge through data analysis. Understanding the tools and techniques available is crucial for success in this evolving landscape. [See also: Web Scraping Legal Considerations]

Conclusion

Page scrapers are powerful tools for extracting data from websites, offering a wide range of applications across various industries. By understanding how they work, the different types available, and the ethical considerations involved, you can leverage page scrapers to gain valuable insights from online data. Whether you’re a researcher, marketer, or business professional, mastering the art of page scraping can provide a significant competitive advantage in today’s data-driven world. Remember to always use these tools responsibly and ethically, respecting the terms of service and robots.txt files of the websites you are scraping. The power of a page scraper lies not only in its ability to collect data but also in the responsible and ethical manner in which it is used.