Mastering Website Scraping with cURL: A Comprehensive Guide

In today’s data-driven world, the ability to extract information from websites is a valuable skill. While various tools and libraries exist for this purpose, curl, a command-line tool, offers a powerful and flexible way to fetch web content for scraping. This guide covers using curl for website scraping, from basic usage to advanced techniques. We’ll explore how to fetch website content, customize requests, extract data from the responses, and address common challenges encountered during the scraping process.

Understanding cURL and Its Role in Web Scraping

curl (Client URL) is a command-line tool and library for transferring data with URLs. It supports various protocols, including HTTP, HTTPS, FTP, and more, making it incredibly versatile. While not specifically designed for web scraping, its ability to fetch web page content makes it a fundamental building block for many web scraping applications. Understanding the core functionality of curl is essential before using it to scrape website data.

Why Use cURL for Web Scraping?

- Ubiquity: curl is pre-installed on most Unix-like operating systems (Linux, macOS) and is readily available for Windows.

- Flexibility: It offers a wide range of options for customizing requests, including setting headers, cookies, and authentication credentials.

- Scriptability: curl can be easily integrated into shell scripts and other programs, allowing for automated scraping tasks.

- Performance: For simple tasks, curl can be very efficient in terms of resource usage.

Basic cURL Usage for Fetching Website Content

The most basic use of curl involves fetching the HTML content of a website. The syntax is straightforward:

curl [options] <URL>

For example, to fetch the HTML content of example.com, you would use the following command:

curl https://www.example.com

This command will output the HTML source code of the website directly to your terminal. To save the output to a file, you can use the -o option:

curl -o output.html https://www.example.com

This will save the HTML content to a file named output.html. Fetching a page this way is the first step toward scraping website data with curl.
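When the same fetch is driven from a script, it helps to have curl report HTTP errors through its exit code. The following is a minimal sketch in Python, assuming curl is available on the PATH. The helper names `build_fetch_args` and `fetch_to_file` are illustrative, and the extra flags (`-sS`, `-L`, `--fail`) are additions that the commands above do not use.

```python
import subprocess


def build_fetch_args(url, output_path=None):
    """Return the argument list for a basic curl fetch."""
    args = [
        "curl",
        "-sS",     # hide the progress meter but still print errors
        "-L",      # follow redirects
        "--fail",  # exit non-zero on HTTP errors (4xx/5xx)
    ]
    if output_path is not None:
        args += ["-o", output_path]  # write the body to a file, as with `curl -o`
    args.append(url)
    return args


def fetch_to_file(url, output_path):
    """Run curl and raise CalledProcessError if it exits non-zero."""
    subprocess.run(build_fetch_args(url, output_path), check=True)
```

Calling `fetch_to_file("https://www.example.com", "output.html")` would run the equivalent of `curl -sS -L --fail -o output.html https://www.example.com`.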

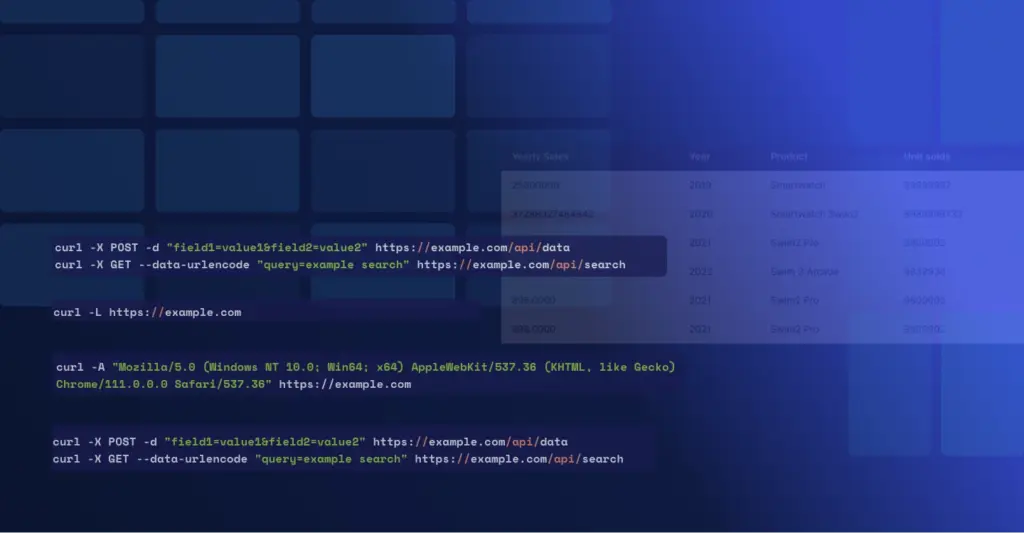

Advanced cURL Options for Web Scraping

While basic usage is helpful, curl’s true power lies in its advanced options. These options allow you to customize your requests to mimic a web browser, handle cookies, and authenticate with websites.

Setting User-Agent

Many websites block or throttle requests from scripts that don’t identify themselves as a legitimate web browser. The -A option allows you to set the User-Agent header:

curl -A "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36" https://www.example.com

This makes your request appear as if it’s coming from a Chrome browser on Windows. Setting the User-Agent is an important step when scraping with curl, as it helps avoid being blocked.

Handling Cookies

Cookies are used by websites to track user sessions and preferences. To handle cookies with curl, you can use the -b and -c options.

- -b <cookie-string/file>: Sends cookies to the server. You can either provide a cookie string directly or specify a file containing cookies.

- -c <cookie-file>: Saves cookies received from the server to a file.

For example, to save cookies to a file named cookies.txt, you would use:

curl -c cookies.txt https://www.example.com

To send those cookies in subsequent requests, you would use:

curl -b cookies.txt https://www.example.com

Proper cookie management is often necessary to scrape data from sites that require authentication or session tracking.
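The two-step cookie flow can be sketched in Python as a pair of curl invocations that share one cookie file. This is an illustration only: the function names `session_commands` and `run_session` are hypothetical, curl is assumed to be on the PATH, and passing both `-b` and `-c` in the second step (so the file stays current) is a choice made here rather than something the commands above require.

```python
import subprocess


def session_commands(first_url, next_url, jar_path="cookies.txt"):
    """Return two curl commands that share one cookie file."""
    # Step 1: -c writes any cookies the server sets into the file.
    save = ["curl", "-sS", "-c", jar_path, first_url]
    # Step 2: -b sends the stored cookies; -c keeps the file up to date.
    reuse = ["curl", "-sS", "-b", jar_path, "-c", jar_path, next_url]
    return save, reuse


def run_session(first_url, next_url, jar_path="cookies.txt"):
    """Run both steps in order and return the body of the second response."""
    save, reuse = session_commands(first_url, next_url, jar_path)
    # The first response body is not needed, only the cookies it sets.
    subprocess.run(save, check=True, stdout=subprocess.DEVNULL)
    result = subprocess.run(reuse, check=True, capture_output=True, text=True)
    return result.stdout
```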

Authentication

Some websites require authentication before you can access their content. curl supports various authentication methods, including basic authentication and digest authentication.

To use basic authentication, you can use the -u option:

curl -u username:password https://www.example.com/protected

Replace username and password with your actual credentials. For more complex authentication schemes, you may need to use other curl options or libraries that handle authentication protocols.
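The options in this section can be combined in a single request. Here is a minimal sketch in Python of one way to assemble them, with credentials read from environment variables instead of being hard-coded in the script. The function names and the variable names `SCRAPER_USER` and `SCRAPER_PASSWORD` are assumptions made for illustration.

```python
import os


def credentials_from_env(user_var="SCRAPER_USER", password_var="SCRAPER_PASSWORD"):
    """Read credentials from environment variables (the names are hypothetical)."""
    user = os.environ.get(user_var)
    password = os.environ.get(password_var)
    if user is None or password is None:
        return None
    return user, password


def build_request_args(url, user_agent=None, cookie_jar=None, credentials=None):
    """Assemble a curl command from the options covered in this section."""
    args = ["curl", "-sS"]
    if user_agent:
        args += ["-A", user_agent]                    # User-Agent header
    if cookie_jar:
        args += ["-b", cookie_jar, "-c", cookie_jar]  # send and save cookies
    if credentials:
        user, password = credentials
        args += ["-u", f"{user}:{password}"]          # basic authentication
    args.append(url)
    return args
```

The resulting list can be passed to `subprocess.run`. Keep in mind that command-line arguments may be visible to other users on a shared machine, so this sketch only keeps the password out of the source file, not out of the process list.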

Parsing and Extracting Data from HTML

Once you’ve fetched the HTML content of a website using curl, the next step is to parse the HTML and extract the data you need. curl itself doesn’t provide built-in HTML parsing capabilities. Therefore, you’ll typically need to combine it with other tools or programming languages.

Using grep, sed, and awk

For simple data extraction tasks, you can use command-line tools like grep, sed, and awk to search for specific patterns in the HTML content. However, these tools are not suitable for parsing complex HTML structures.
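The same pattern-matching idea can be sketched in Python, which keeps the examples in one language. The function `extract_title` is illustrative. Like a grep one-liner, it only works on simple, predictable markup and is not a substitute for a real HTML parser.

```python
import re

# Match the contents of the first <title> element, across line breaks.
TITLE_PATTERN = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def extract_title(html):
    """Return the text of the first <title> element, or None if there is none."""
    match = TITLE_PATTERN.search(html)
    if match is None:
        return None
    # Collapse runs of whitespace, since titles are sometimes split across lines.
    return " ".join(match.group(1).split())
```

Here `html` would be the text that curl wrote to standard output or to a file.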

Combining cURL with Programming Languages

A more robust approach is to use a programming language like Python, Ruby, or PHP, along with an HTML parsing library like Beautiful Soup (Python), Nokogiri (Ruby), or DOMDocument (PHP). These libraries provide powerful tools for navigating and extracting data from HTML documents.

Example (Python with Beautiful Soup):

import subprocess

from bs4 import BeautifulSoup

url = "https://www.example.com"

# Fetch HTML content using curl (-s hides the progress meter, -L follows redirects)
result = subprocess.run(['curl', '-s', '-L', url], capture_output=True, check=True)
html_content = result.stdout.decode('utf-8')

# Parse HTML with Beautiful Soup
soup = BeautifulSoup(html_content, 'html.parser')

# Extract all links
for link in soup.find_all('a'):
    print(link.get('href'))

This Python script uses subprocess to execute curl, fetches the HTML content, and then uses Beautiful Soup to parse the HTML and extract all the links. This approach lets you fetch pages with curl and then process the data in a structured way.

Handling JavaScript-Rendered Content

Many modern websites use JavaScript to dynamically generate content. curl, by itself, cannot execute JavaScript. This means that if the data you need is rendered by JavaScript, curl will only fetch the initial HTML source code, which may not contain the desired content.
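One way to tell whether a page falls into this category is to check what the element you care about contains in the HTML that curl actually received. The sketch below uses Python's standard `html.parser` module. The class and function names, and the element id in the usage note, are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ElementTextCollector(HTMLParser):
    """Collect the text inside the first element with a given id."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.found = False
        self.depth = 0  # greater than 0 while inside the target element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.depth == 0 and not self.found and dict(attrs).get("id") == self.target_id:
            self.found = True
            if tag not in VOID_TAGS:
                self.depth = 1
        elif self.depth > 0 and tag not in VOID_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if self.depth > 0 and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0:
            self.chunks.append(data)


def static_text_for_id(html, target_id):
    """Return the element's text as served, or None if the id is absent."""
    collector = ElementTextCollector(target_id)
    collector.feed(html)
    collector.close()
    if not collector.found:
        return None
    return "".join(collector.chunks).strip()
```

If `static_text_for_id(html, "content-loaded-by-js")` returns an empty string or `None` for a page that shows content in a browser, the content is most likely being filled in by JavaScript after the initial load.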

Using Headless Browsers

To scrape JavaScript-rendered content, you need a real browser engine, typically a headless browser driven by an automation tool such as Puppeteer (Node.js) or Selenium. A headless browser executes JavaScript and renders the page, allowing you to scrape the dynamically generated content.

Example (Node.js with Puppeteer):

const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();
  await page.goto('https://www.example.com');

  // Wait for JavaScript to render the content
  await page.waitForSelector('#content-loaded-by-js');

  // Extract the content
  const content = await page.$eval('#content-loaded-by-js', el => el.textContent);
  console.log(content);

  await browser.close();
})();

This Node.js script uses Puppeteer to launch a headless Chrome browser, navigates to the website, waits for the JavaScript to render the content, and then extracts the content using a CSS selector. A headless browser is essential when the data you need is rendered by JavaScript, since curl alone cannot retrieve it.

Respecting Website Terms of Service and Robots.txt

Before you start scraping any website, it’s crucial to respect the website’s terms of service and robots.txt file. The robots.txt file is a text file that tells web robots (like web scrapers) which parts of the website should not be crawled.

You can usually find the robots.txt file at the root of the website (e.g., https://www.example.com/robots.txt). It’s important to adhere to the rules specified in the robots.txt file to avoid overloading the website’s servers or violating their terms of service. Ignoring these rules can lead to your IP address being blocked. Responsible scraping is paramount whenever you collect website data with curl.
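Checking a URL against robots.txt can be automated. The sketch below uses Python's standard `urllib.robotparser` module on robots.txt text that has already been downloaded, for example with curl. The sample rules and the user agent name `my-scraper` are invented for illustration.

```python
from urllib.robotparser import RobotFileParser


def load_rules(robots_txt):
    """Parse robots.txt text that was already downloaded (for example with curl)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Sample rules, invented for illustration; a real file comes from the site.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = load_rules(SAMPLE_ROBOTS_TXT)
print(rules.can_fetch("my-scraper", "https://www.example.com/index.html"))           # True
print(rules.can_fetch("my-scraper", "https://www.example.com/private/report.html"))  # False
print(rules.crawl_delay("my-scraper"))                                               # 5
```

A scraper would call `can_fetch` before each request and skip any URL for which it returns `False`.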

Common Challenges and Solutions

Web scraping can be challenging due to various factors, such as:

- IP Blocking: Websites may block your IP address if they detect excessive requests from a single source.

- Rate Limiting: Websites may limit the number of requests you can make within a certain time period.

- Dynamic Content: As mentioned earlier, JavaScript-rendered content can be difficult to scrape.

- Website Structure Changes: Websites may change their structure, breaking your scraper.

Solutions

- Use Proxies: Rotate your IP address by using a proxy service.

- Implement Rate Limiting: Add delays between requests to avoid overloading the website’s servers.

- Use Headless Browsers: For JavaScript-rendered content.

- Monitor and Adapt: Regularly monitor your scraper and adapt it to changes in the website’s structure.
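The first two solutions can be sketched in a few lines of Python. This is a minimal illustration, assuming curl is on the PATH. The function names, the two-second default delay, and the proxy address in the usage note are hypothetical, and the `sleep` parameter exists only so the pause can be replaced in tests.

```python
import subprocess
import time


def curl_fetch(url, proxy=None):
    """Fetch a URL with curl, optionally through a proxy (curl's -x option)."""
    args = ["curl", "-sS", "--fail"]
    if proxy:
        args += ["-x", proxy]
    args.append(url)
    return subprocess.run(args, check=True, capture_output=True, text=True).stdout


def fetch_politely(urls, fetch=curl_fetch, delay_seconds=2.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        results[url] = fetch(url)
    return results
```

To route requests through a proxy, pass a wrapper such as `lambda url: curl_fetch(url, proxy="http://proxy.example:8080")` as the `fetch` argument.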

Conclusion

curl is a powerful tool for fetching website content and forms the basis of many web scraping workflows. By understanding its basic and advanced options, you can scrape website data effectively. However, remember to respect website terms of service, handle JavaScript-rendered content appropriately, and address common challenges like IP blocking and rate limiting. Combining curl with programming languages and HTML parsing libraries allows you to extract and process data in a structured way. With careful planning and execution, you can leverage curl to gather valuable information from the web. Always prioritize ethical and responsible scraping practices.
