Define Web Crawling: A Comprehensive Guide to Understanding the Process

In today’s digital landscape, information is king. Search engines like Google and Bing rely heavily on a process called web crawling to discover, index, and rank the vast amount of content available online. But what exactly is web crawling? This article provides an overview of web crawling, covering its definition, how it works, its applications, and the ethical considerations involved.

What is Web Crawling?

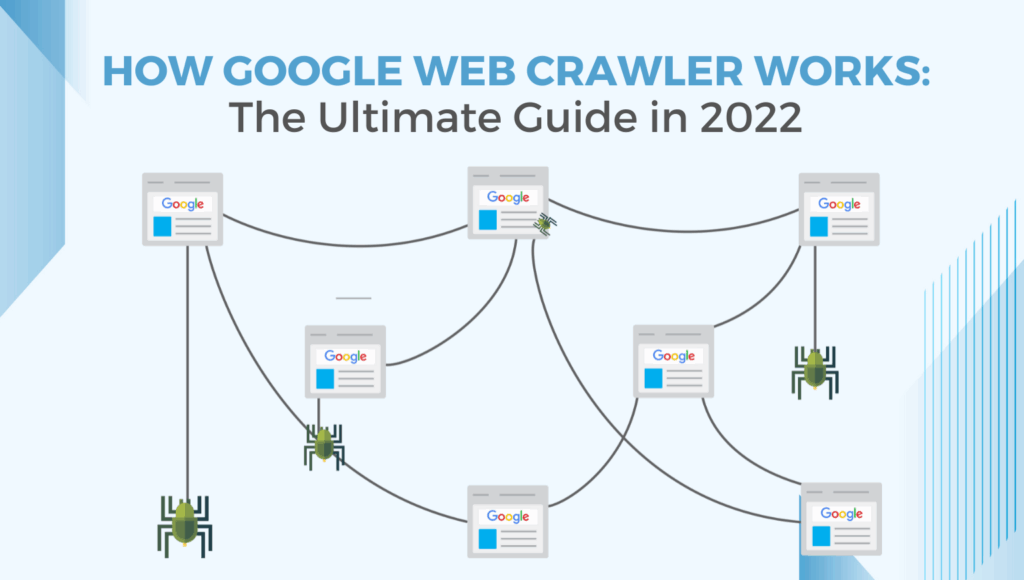

Put simply, web crawling is an automated process in which a program systematically browses the World Wide Web. This program, often referred to as a web crawler, spider, or bot, follows links from one webpage to another, collecting information along the way. Think of it as a digital librarian, meticulously cataloging the internet’s ever-expanding collection of resources.

The primary purpose of web crawling is to discover and index web content. This allows search engines to provide relevant and up-to-date search results to users. Without web crawling, search engines would be unable to effectively navigate and understand the web, making it difficult for users to find the information they need.

How Web Crawling Works: A Step-by-Step Process

The process of web crawling can be broken down into several key steps:

- Initialization: The crawler starts with a list of URLs known as the ‘seed URLs’. These URLs serve as the starting point for the crawling process.

- Downloading: The crawler fetches the HTML content of the seed URLs.

- Parsing: The crawler parses the HTML content to extract valuable information, including text, images, and, most importantly, links to other webpages.

- Indexing: The extracted information is then indexed, allowing the search engine to efficiently retrieve and rank the content later.

- Link Extraction: The crawler extracts all the hyperlinks found on the downloaded webpage.

- URL Prioritization: The crawler prioritizes the extracted URLs based on various factors, such as website authority, relevance, and update frequency.

- Recursion: The crawler repeats the downloading, parsing, indexing, link extraction, and prioritization steps for each prioritized URL, effectively traversing the web.

- Robots.txt Compliance: Throughout the process, well-behaved crawlers follow the rules in each website’s `robots.txt` file, which tells crawlers which parts of the site they should not crawl. Compliance is voluntary, which is why it is central to ethical and responsible web crawling.
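The steps above can be sketched as a short loop. This is a minimal illustration, not how any particular search engine works: the `FAKE_WEB` dictionary, the `fetch` stand-in, and the `crawl` function are all hypothetical names, and the "web" is an in-memory mapping so the example runs without network access. Prioritization is reduced to first-in, first-out order, and "indexing" simply stores the raw HTML.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch over HTTP.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">home</a>',
    "https://example.com/b": "<p>No links here</p>",
}


class LinkParser(HTMLParser):
    """Parsing step: collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url):
    """Downloading step (stand-in): returns None for unknown URLs."""
    return FAKE_WEB.get(url)


def crawl(seeds, max_pages=100):
    """Breadth-first crawl starting from the seed URLs."""
    frontier = deque(seeds)   # URLs waiting to be crawled
    seen = set(seeds)         # prevents queueing the same URL twice
    index = {}                # "indexing" here is just storing the HTML
    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        parser = LinkParser()
        parser.feed(html)
        index[url] = html
        for href in parser.links:
            # Resolve relative links and drop any #fragment.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return index
```

Calling `crawl(["https://example.com/"])` visits the three pages once each, even though they link back to one another, because the `seen` set filters out URLs that were already queued.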

Key Components of a Web Crawler

Several key components work together to make web crawling possible:

- Crawler Policy: Defines the rules and guidelines that the crawler must follow. This includes aspects like politeness (avoiding overloading servers), freshness (updating indexed content regularly), and avoiding traps (preventing the crawler from getting stuck in infinite loops).

- URL Frontier: A data structure that stores the list of URLs to be crawled. The frontier prioritizes URLs based on various factors to optimize the crawling process.

- Fetcher: Responsible for downloading the content of webpages.

- Parser: Extracts information from the downloaded HTML content.

- Indexer: Stores the extracted information in an organized manner, making it easily searchable.
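To make the URL frontier more concrete, here is one possible sketch built on Python’s `heapq` module. The `URLFrontier` class and its numeric priority scheme (lower number means crawl sooner) are assumptions made for illustration; production frontiers typically also track per-host politeness and persistence, which are omitted here.

```python
import heapq
import itertools


class URLFrontier:
    """Priority queue of URLs that never queues the same URL twice."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        # Monotonic counter breaks ties so equal priorities pop in insertion order.
        self._counter = itertools.count()

    def add(self, url, priority):
        """Queue a URL once; lower priority numbers are served first."""
        if url in self._seen:
            return False
        self._seen.add(url)
        heapq.heappush(self._heap, (priority, next(self._counter), url))
        return True

    def pop(self):
        """Return the queued URL with the lowest priority number."""
        _priority, _order, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)
```

In this design the fetcher would call `pop()` to get its next URL, and the parser would call `add()` for each extracted link, with the priority computed from whatever signals the crawler policy defines.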

Applications of Web Crawling

Web crawling has numerous applications beyond search engine indexing. Here are a few examples:

- Search Engine Optimization (SEO): SEO professionals use web crawlers to analyze websites, identify technical issues, and optimize content for better search engine rankings.

- Data Mining: Crawlers can be used to collect large datasets from the web for research, analysis, and business intelligence.

- Price Monitoring: E-commerce businesses use crawlers to track competitor prices and adjust their own pricing strategies accordingly.

- News Aggregation: News aggregators use crawlers to collect news articles from various sources and present them in a centralized location.

- Website Archiving: Organizations like the Internet Archive use crawlers to preserve snapshots of websites for historical purposes.

- Content Monitoring: Crawlers can be used to monitor websites for specific keywords or phrases, which can be useful for brand monitoring or identifying copyright infringement.

Ethical Considerations and Best Practices

While web crawling is a powerful tool, it’s important to use it responsibly and ethically. Here are some key considerations:

- Respect Robots.txt: Always adhere to the rules defined in the `robots.txt` file. This file specifies which parts of the website should not be crawled.

- Be Polite: Avoid overloading servers with excessive requests. Implement delays between requests to minimize the impact on website performance.

- Identify Your Crawler: Clearly identify your crawler in the User-Agent header. This allows website administrators to identify and contact you if necessary.

- Avoid Crawling Sensitive Data: Be mindful of the data you are collecting and avoid crawling sensitive or private information.

- Obey Legal Restrictions: Be aware of and comply with all applicable laws and regulations regarding data collection and privacy.
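The first three practices can be combined in a few lines using Python’s standard `urllib.robotparser` module. The sketch below is illustrative only: the `USER_AGENT` string, the sample `ROBOTS_TXT` content, and the `build_rules` and `plan_request` helpers are hypothetical. The rules are parsed from a string so the example runs offline; a real crawler would download each site’s `/robots.txt` first. Note that `Crawl-delay` is a non-standard directive that only some crawlers honor.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical crawler identity, including a URL where admins can learn more.
USER_AGENT = "ExampleCrawler/1.0 (+https://example.com/bot-info)"

# Sample robots.txt content, assumed for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_rules(robots_text):
    """Parse robots.txt text into a rule set."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


def plan_request(rules, url, default_delay=1.0):
    """Return (allowed, delay_seconds, headers) for a prospective request."""
    allowed = rules.can_fetch(USER_AGENT, url)
    delay = rules.crawl_delay(USER_AGENT)
    if delay is None:
        # No Crawl-delay given: fall back to our own polite default.
        delay = default_delay
    return allowed, float(delay), {"User-Agent": USER_AGENT}
```

A crawler would skip any URL for which `allowed` is false, wait `delay_seconds` (for example with `time.sleep`) between requests to the same host, and send the returned headers with every request.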

The Future of Web Crawling

Web crawling continues to evolve alongside the internet. As the web becomes more dynamic and complex, crawlers are adapting to handle new challenges. Some key trends in web crawling include:

- JavaScript Rendering: Modern websites often rely heavily on JavaScript to generate content. Crawlers are increasingly incorporating JavaScript rendering capabilities to accurately index these websites.

- AI-Powered Crawling: Artificial intelligence (AI) is being used to improve the efficiency and accuracy of web crawling. AI can be used to prioritize URLs, identify relevant content, and detect crawling traps.

- Decentralized Crawling: Large crawlers have long distributed their workload across multiple machines for scalability and resilience. Decentralized systems, which spread crawling across independently operated nodes rather than a single operator’s cluster, are also emerging.

Conclusion

Web crawling is a fundamental process that underpins search engines and many other online applications. By understanding how web crawling works, its applications, and the ethical considerations involved, you can gain a deeper appreciation for the complexities of the modern web. Defining web crawling is just the first step; responsible and informed use is key to harnessing its power for good.

From search engine optimization to data mining, the applications of web crawling are vast and continue to expand. As the web evolves, so too will the techniques and technologies used to crawl it, and web crawling will remain a critical tool for accessing and organizing the world’s information.