How to Extract Data from Websites Online: A Comprehensive Guide

In today’s data-driven world, the ability to extract data from websites online is a crucial skill for businesses, researchers, and individuals alike. Web scraping, as it’s often called, allows you to gather valuable information from the vast expanse of the internet, turning unstructured web content into structured, usable data. This comprehensive guide will explore the various methods, tools, and considerations involved in effectively and ethically extracting data from websites online.

Understanding Web Scraping and Its Applications

Web scraping is the automated process of collecting data from websites. Rather than copying and pasting information by hand, you use a tool or script that fetches pages and pulls out the fields you need, saving significant time and effort. The extracted data can then be used for a variety of purposes, including:

- Market Research: Analyzing competitor pricing, product features, and customer reviews.

- Lead Generation: Identifying potential customers and gathering their contact information.

- Financial Analysis: Tracking stock prices, economic indicators, and market trends.

- News Aggregation: Compiling news articles from various sources into a single platform.

- Academic Research: Collecting data for social science studies, linguistic analysis, and more.

- Real Estate Analysis: Monitoring property listings, prices, and market trends.

The ability to extract data from websites online opens up a world of possibilities for informed decision-making and strategic planning.

Methods for Extracting Data from Websites Online

There are several methods for extracting data from websites online, each with its own advantages and disadvantages:

Manual Copy-Pasting

The simplest method is manual copy-pasting. It works for small, one-off tasks, but it’s time-consuming, prone to human error, and does not scale to large projects.

Web Scraping Tools

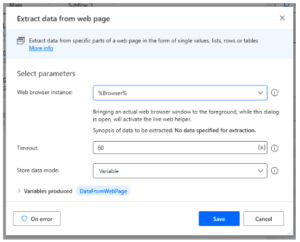

Web scraping tools automate the process of extracting data from websites online. These tools range from browser extensions to desktop applications and cloud-based platforms. They typically allow you to specify the data you want to extract by selecting elements on the web page or defining CSS selectors or XPath expressions.
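To make the idea of selecting elements with an XPath expression concrete, here is a minimal sketch using only Python’s standard library. The markup, class names, and the `extract_products` function are invented for illustration. Note that `xml.etree.ElementTree` supports only a subset of XPath and requires well-formed markup, so real-world pages usually call for a dedicated HTML parser instead.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing used only for illustration.
PAGE = """
<html>
  <body>
    <div class="product">
      <h2 class="name">Widget</h2>
      <span class="price">19.99</span>
    </div>
    <div class="product">
      <h2 class="name">Gadget</h2>
      <span class="price">24.50</span>
    </div>
  </body>
</html>
"""


def extract_products(markup):
    """Return one dict per <div class="product"> block in the markup."""
    root = ET.fromstring(markup.strip())
    products = []
    # ".//div[@class='product']" matches every product block at any depth.
    for block in root.findall(".//div[@class='product']"):
        name = block.findtext("h2[@class='name']")
        price = block.findtext("span[@class='price']")
        products.append({"name": name, "price": float(price)})
    return products


products = extract_products(PAGE)
print(products)
```

The same pattern (select a repeating container, then read named fields inside it) is what visual scraping tools generate behind the scenes when you click on elements.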

Popular Web Scraping Tools:

- ParseHub: A user-friendly web scraping tool with a visual interface.

- Octoparse: A powerful web scraping platform with advanced features like IP rotation and scheduling.

- Scrapy: A Python-based framework for building custom web scrapers.

- Beautiful Soup: A Python library for parsing HTML and XML documents. It does not fetch pages itself, so it is typically paired with an HTTP client such as Requests, and it can also be used inside Scrapy projects.

- Apify: A cloud-based web scraping platform that offers a variety of pre-built scrapers and custom solutions.

APIs (Application Programming Interfaces)

Many websites offer APIs that allow developers to access their data in a structured format. Using an API is often the preferred method for extracting data from websites online, as it’s more reliable and efficient than web scraping. However, not all websites offer APIs, and those that do may have usage restrictions or require authentication.

When available, APIs provide a clean and structured way to extract data from websites online, eliminating the need to parse HTML or XML.
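As a rough sketch of what working with an API looks like, the example below builds a request URL and converts a JSON response into flat records. The endpoint `https://api.example.com/v1/listings`, its parameters, and the response shape are all hypothetical; a real API documents its own. The response body is a hard-coded sample so the example runs without network access.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the one the provider documents.
BASE_URL = "https://api.example.com/v1/listings"

# Sample body standing in for what such an API might return.
SAMPLE_RESPONSE = """
{"results": [
    {"id": 1, "city": "Austin", "price": 350000},
    {"id": 2, "city": "Denver", "price": 420000}
]}
"""


def build_url(base_url, **params):
    """Append query parameters to the endpoint URL."""
    return f"{base_url}?{urlencode(params)}"


def parse_listings(payload):
    """Turn a JSON response body into a list of flat records."""
    data = json.loads(payload)
    return [
        {"id": item["id"], "city": item["city"], "price": item["price"]}
        for item in data["results"]
    ]


url = build_url(BASE_URL, city="Austin", page=1)
records = parse_listings(SAMPLE_RESPONSE)
print(url)
print(records)
```

Because the data already arrives as named fields, there is no selector to maintain and nothing breaks when the site’s page layout changes.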

Headless Browsers

Browser automation tools such as Puppeteer and Selenium can drive a real browser in headless mode, meaning without a visible window. This lets you automate browser actions and extract data from websites that rely heavily on JavaScript. Because the page is loaded and executed just as it would be for a real user, you can interact with dynamic content and extract data that a plain HTTP request would never see.

This approach is particularly useful for websites that load content via AJAX or are built as single-page applications.

Ethical Considerations and Legal Implications

While extracting data from websites online can be valuable, it’s important to consider the ethical and legal implications. Always respect the website’s terms of service and its robots.txt file, which tells automated clients which paths they should not crawl. Avoid overwhelming the website with requests, as this can degrade performance or even amount to a denial-of-service. Furthermore, be mindful of copyright laws and privacy regulations.

Before you extract data from websites online, review the following:

- Terms of Service: Understand the website’s rules regarding data scraping.

- Robots.txt: Respect the website’s instructions on which pages to avoid.

- Rate Limiting: Avoid making too many requests in a short period of time.

- Data Privacy: Be careful when handling personal data and comply with relevant privacy regulations like GDPR and CCPA.

Failure to comply with these guidelines can result in legal action or being blocked from accessing the website.
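The robots.txt and rate-limiting checks in the list above can be automated. The sketch below uses Python’s standard `urllib.robotparser` on an inline sample file; the rules, the URLs, and the `example-research-bot` user agent are made up for illustration. In practice you would load the site’s real file with `set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-research-bot"  # hypothetical name for this scraper


def make_parser(robots_text):
    """Build a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = make_parser(ROBOTS_TXT)

# Check individual URLs before requesting them.
allowed_public = parser.can_fetch(USER_AGENT, "https://example.com/products/1")
allowed_private = parser.can_fetch(USER_AGENT, "https://example.com/private/report")

# Seconds the site asks crawlers to wait between requests (None if unspecified).
delay = parser.crawl_delay(USER_AGENT)

print(allowed_public, allowed_private, delay)
```

Note that `Crawl-delay` is a non-standard directive that only some sites publish, so a scraper should still apply a conservative delay of its own when it is absent.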

Best Practices for Web Scraping

To ensure that your web scraping efforts are successful and ethical, follow these best practices:

- Identify Your Target Data: Clearly define what data you need to extract before you start scraping.

- Use a Robust Scraping Tool: Choose a tool that suits your needs and offers features like IP rotation and error handling.

- Handle Errors Gracefully: Implement error handling to deal with unexpected situations, such as broken links or changes in website structure.

- Rotate IP Addresses: Use IP rotation to avoid being blocked by the website.

- Use User Agents: Mimic a real user by setting a user agent in your scraper.

- Respect Rate Limits: Avoid making too many requests in a short period of time.

- Store Data Efficiently: Choose a suitable data storage format, such as CSV, JSON, or a database.

- Monitor Your Scraper: Regularly monitor your scraper to ensure that it’s working correctly and that the website structure hasn’t changed.

By following these best practices, you can extract data from websites online effectively and ethically.
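As one way to handle errors gracefully, the sketch below retries a failed request with exponentially growing pauses. `TransientError`, `fetch_with_retry`, and `flaky_fetch` are hypothetical names introduced here; the fetcher is a stand-in that fails twice so the example runs offline, and the `sleep` parameter is injectable so the waits can be recorded instead of actually pausing.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 5xx)."""


def fetch_with_retry(fetch, url, retries=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying transient failures with exponential backoff."""
    for attempt in range(retries + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == retries:
                raise  # give up after the final attempt
            # Wait 1s, 2s, 4s, ... (scaled by base_delay) before trying again.
            sleep(base_delay * 2 ** attempt)


# Stand-in fetcher that fails twice and then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary failure")
    return f"<html>content of {url}</html>"


waits = []  # record the requested pauses instead of sleeping
page = fetch_with_retry(flaky_fetch, "https://example.com/page", sleep=waits.append)
print(page, waits)
```

Backing off after a failure also serves the rate-limit guideline: a struggling server gets progressively more breathing room rather than an immediate flood of retries.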

Advanced Techniques for Data Extraction

For more complex web scraping scenarios, consider using these advanced techniques:

Proxies and IP Rotation

Using proxies and IP rotation is essential for avoiding IP bans. Proxies act as intermediaries between your scraper and the target website, hiding your real IP address. IP rotation involves switching between multiple proxies to further reduce the risk of being blocked.
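A simple round-robin rotation can be sketched as follows. The proxy addresses are placeholders from a range reserved for documentation, and `assign_proxies` is a hypothetical helper; a real setup would pass each assigned proxy to whatever HTTP client it uses, and only where the site’s terms allow it.

```python
from itertools import cycle

# Placeholder proxy addresses (reserved documentation range, not real servers).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


urls = [f"https://example.com/page/{n}" for n in range(1, 6)]
plan = assign_proxies(urls, PROXIES)

for url, proxy in plan:
    print(url, "via", proxy)
```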

CAPTCHA Solving

Some websites use CAPTCHAs to prevent automated scraping. CAPTCHA solving services can automatically solve these challenges, allowing your scraper to continue extracting data.

JavaScript Rendering

Websites that rely heavily on JavaScript may require you to render the page before extracting data. Browser automation tools like Puppeteer and Selenium, running a browser in headless mode, can execute the JavaScript and give you access to the dynamic content.

Machine Learning for Data Cleaning

The data you extract from websites online may not always be clean and consistent. Machine learning techniques can be used to clean and normalize the data, improving its quality and usability.
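Before reaching for machine learning, it often helps to see how far simple rules go. The sketch below is a rule-based baseline, not a machine learning method: it normalizes whitespace and converts price strings to numbers. The sample rows and the helper names `clean_text` and `clean_price` are invented for illustration.

```python
import re


def clean_text(raw):
    """Collapse runs of whitespace and trim both ends."""
    return " ".join(raw.split())


def clean_price(raw):
    """Convert strings like '$1,299.00' to a float; return None if no number is found."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


# Hypothetical scraped rows with typical inconsistencies.
raw_rows = [
    {"name": "  Widget\n Pro ", "price": "$1,299.00"},
    {"name": "Gadget", "price": "USD 24.5"},
    {"name": "Mystery item", "price": "Call for price"},
]

cleaned = [
    {"name": clean_text(row["name"]), "price": clean_price(row["price"])}
    for row in raw_rows
]
print(cleaned)
```

Rows that the rules cannot parse come back with `None`, which makes them easy to set aside for manual review or for a learned model to handle.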

Choosing the Right Tool for the Job

The best tool for extracting data from websites online depends on your specific needs and technical skills. If you’re a beginner, a user-friendly tool like ParseHub or Octoparse might be a good choice. If you’re a developer, Scrapy and Beautiful Soup offer more flexibility and control. If you need to scrape dynamic websites, browser automation tools like Puppeteer and Selenium are usually the most practical option.

Consider the following factors when choosing a web scraping tool:

- Ease of Use: How easy is the tool to learn and use?

- Features: Does the tool offer the features you need, such as IP rotation and error handling?

- Scalability: Can the tool handle large-scale scraping projects?

- Price: How much does the tool cost?

- Support: Does the tool offer good customer support?

Conclusion

Extracting data from websites online is a powerful technique for gathering valuable information from the internet. By understanding the various methods, tools, and ethical considerations involved, you can harness web scraping effectively and responsibly. Whether you’re a business professional, researcher, or individual, this ability can give you a competitive edge and unlock new opportunities.

The process requires careful planning and execution, but the rewards can be significant. Always prioritize ethical practices and respect the terms of service of the websites you scrape, and validate the data you extract against other sources whenever possible. As technology evolves, so too will the methods for extracting data from websites online, so staying informed and adapting to these changes will be crucial for continued success in this dynamic field.