Is JSON Parse Expensive? Understanding Performance Implications

In modern web development and data exchange, JSON (JavaScript Object Notation) has become a ubiquitous format. Its human-readable structure and ease of use have made it a favorite for APIs, configuration files, and data serialization. However, a question often arises: is JSON parsing expensive? Understanding its performance implications is crucial for optimizing applications and ensuring a smooth user experience. This article examines the factors that influence JSON parsing performance and offers strategies for handling data efficiently.

What is JSON Parsing?

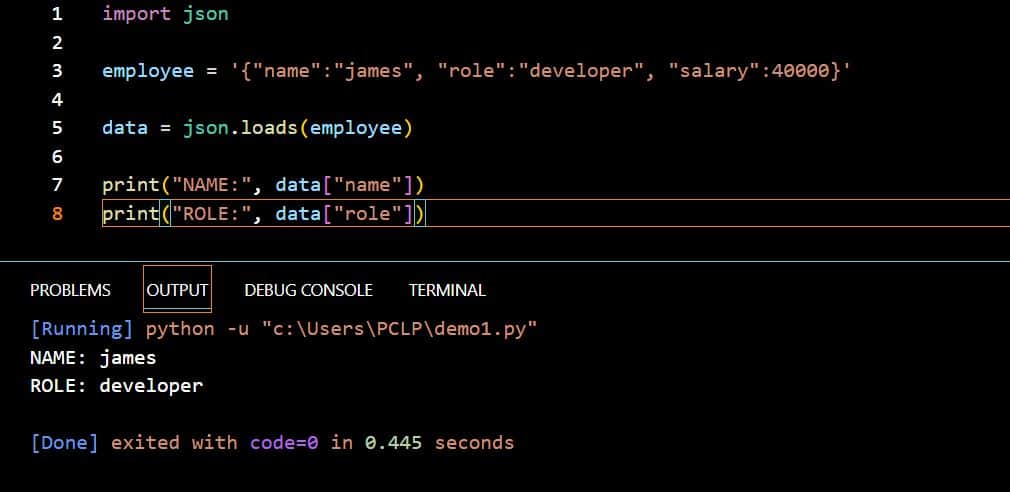

JSON parsing is the process of converting a JSON string into a data structure that can be used by a programming language. For example, a JSON string representing an object might be converted into a JavaScript object, a Python dictionary, or a Java object. This process involves reading the string, validating its syntax, and constructing the corresponding data representation in memory. The efficiency of this process can significantly impact application performance, especially when dealing with large or complex JSON documents.
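
As a small illustration in Python (the sample record below is made up), the standard library's json.loads performs both steps described above. It validates the text and builds native objects: JSON objects become dicts, arrays become lists, true/false become True/False, and null becomes None. Invalid input is rejected with an error rather than partially parsed.

```python
import json

raw = '{"name": "Ada", "languages": ["en", "fr"], "active": true, "score": 9.5, "manager": null}'
record = json.loads(raw)  # validates the syntax and builds Python objects in memory

print(type(record).__name__)                # dict
print(record["languages"])                  # ['en', 'fr']
print(record["active"], record["manager"])  # True None

try:
    json.loads('{"name": "Ada",}')  # a trailing comma is not valid JSON
except json.JSONDecodeError as exc:
    print("rejected:", exc.msg)
```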

Factors Affecting JSON Parsing Performance

Several factors can influence how expensive JSON parse operations are. Understanding these factors is the first step in optimizing JSON handling within your applications.

Size and Complexity of the JSON Document

The size of the JSON document is a primary factor. Larger JSON files naturally take longer to parse. Complexity also matters. A deeply nested JSON structure with many arrays and objects will generally be more computationally intensive to parse than a simple, flat JSON structure. The deeper the nesting and the more numerous the elements, the more resources are needed to traverse and represent the data.
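
One way to see this on your own machine is a quick timing sketch like the one below. It builds synthetic payloads (the helpers flat_payload, nested_payload, and mean_parse_ms are hypothetical names for this example) and times Python's json.loads with timeit. Absolute numbers will vary by machine and Python version. The nested case is small in bytes, so divide by the byte count if you want to compare cost per byte.

```python
import json
import timeit

def flat_payload(n: int) -> str:
    """JSON text for one flat object with n integer fields."""
    return json.dumps({f"k{i}": i for i in range(n)})

def nested_payload(depth: int) -> str:
    """JSON text for objects nested `depth` levels deep around a single leaf."""
    doc: dict = {"leaf": 0}
    for _ in range(depth):
        doc = {"child": doc}
    return json.dumps(doc)

def mean_parse_ms(raw: str, number: int = 20) -> float:
    """Average wall-clock time of json.loads(raw), in milliseconds."""
    return timeit.timeit(lambda: json.loads(raw), number=number) / number * 1000

cases = {
    "flat, 1,000 fields": flat_payload(1_000),
    "flat, 100,000 fields": flat_payload(100_000),
    "nested, 200 levels": nested_payload(200),
}
for label, raw in cases.items():
    print(f"{label:<22} {len(raw):>9,} bytes  {mean_parse_ms(raw):.3f} ms")
```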

Parsing Library or Implementation

The choice of parsing library can significantly impact performance. Different libraries employ different algorithms and optimization techniques. Some libraries are designed for speed, while others prioritize memory usage or features. For example, native browser JSON parsers are often highly optimized, while third-party libraries may offer more flexibility but at a potential performance cost. Benchmarking different libraries with representative JSON data is essential to identify the most efficient option for a given use case.
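
A minimal benchmarking harness might look like the sketch below. The benchmark function and the sample payload are hypothetical. The two standard-library configurations stand in for competing libraries: parsing floats as decimal.Decimal is an example of a feature (exact decimals) that typically costs speed. Swap in the parsers you are actually evaluating and a payload taken from your real application.

```python
import decimal
import json
import timeit
from typing import Any, Callable, Dict

def benchmark(parsers: Dict[str, Callable[[str], Any]], raw: str, number: int = 20) -> Dict[str, float]:
    """Return the mean time in milliseconds each parser takes to parse `raw`."""
    results: Dict[str, float] = {}
    for name, parse in parsers.items():
        parse(raw)  # warm-up call; also fails fast if a parser rejects the input
        seconds = timeit.timeit(lambda: parse(raw), number=number)
        results[name] = seconds / number * 1000
    return results

# Synthetic stand-in for "representative data".
sample = json.dumps([{"id": i, "price": i * 0.25, "tags": ["a", "b"]} for i in range(5_000)])

timings = benchmark(
    {
        "json.loads (float)": json.loads,
        "json.loads (Decimal)": lambda s: json.loads(s, parse_float=decimal.Decimal),
    },
    sample,
)
for name, ms in timings.items():
    print(f"{name}: {ms:.2f} ms")
```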

Hardware and System Resources

The underlying hardware and system resources also play a role. Parsing a large JSON file on a low-powered device with limited memory will naturally take longer than on a high-performance server. CPU speed, memory availability, and disk I/O speed can all influence parsing time. When designing applications that handle large JSON payloads, it’s important to consider the target hardware and ensure that it has sufficient resources to handle the parsing load.

Number of Parsing Operations

How often an application parses JSON also affects its overall performance. If an application frequently parses large JSON files, the cumulative cost can become significant. Caching parsed JSON data or switching to a more efficient data format can help mitigate this. Reducing unnecessary parsing operations is a key strategy for improving performance.

Strategies for Optimizing JSON Parsing

Fortunately, there are several strategies to minimize the performance impact of JSON parse operations. These strategies range from choosing the right tools and techniques to optimizing the data itself.

Use Efficient Parsing Libraries

Selecting the right parsing library is crucial. Many languages offer multiple JSON parsing libraries, each with its own performance characteristics. Research and benchmark different libraries to find the one that best suits your needs. For example, in JavaScript, the built-in JSON.parse() is often the fastest option for simple parsing. In Python, libraries like orjson are known for their speed.
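
In Python, a common pattern is to prefer the faster library when it is installed and fall back to the standard library otherwise. The sketch below assumes orjson is an optional dependency; the names fast_loads and PARSER are hypothetical. Both parsers raise a ValueError subclass on invalid input, but they are not identical on every non-standard input, so check orjson's documentation before swapping it in.

```python
import json
from typing import Any, Union

try:
    import orjson  # optional third-party dependency: pip install orjson

    def fast_loads(raw: Union[str, bytes]) -> Any:
        """Parse with orjson when it is available."""
        return orjson.loads(raw)

    PARSER = "orjson"
except ImportError:
    def fast_loads(raw: Union[str, bytes]) -> Any:
        """Fall back to the standard library parser (accepts str or bytes)."""
        return json.loads(raw)

    PARSER = "json"

print(PARSER, fast_loads(b'{"ok": true}'))
```

Keeping the choice behind a single function means the rest of the code base does not need to know which parser is in use.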

Minimize JSON Size

Reducing the size of the JSON document reduces the work the parser has to do, and smaller payloads also transfer faster. Useful techniques include:

- Removing unnecessary whitespace.

- Using shorter keys.

- Avoiding redundant data.

- Compressing the JSON data in transit with gzip or Brotli, which shrinks the transfer size (though not the text the parser ultimately processes).

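Minifiers remove insignificant whitespace (and comments, for commented variants such as JSONC, since standard JSON does not allow comments) without changing the data. Compressing JSON before transmission reduces download time, but the receiver must decompress it back to its full size before parsing, so compression speeds up delivery rather than the parsing step itself.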
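
The difference between minification and compression can be sketched with Python's standard json and gzip modules on a made-up payload. Minifying with compact separators shrinks the text the parser reads; gzip shrinks the bytes on the wire, but decompression restores the full minified text before json.loads ever sees it.

```python
import gzip
import json

data = {"users": [{"id": i, "name": f"user{i}", "active": True} for i in range(200)]}

pretty = json.dumps(data, indent=2)
minified = json.dumps(data, separators=(",", ":"))  # no spaces after separators, no newlines
compressed = gzip.compress(minified.encode("utf-8"))

print(len(pretty), len(minified), len(compressed))

# The receiver decompresses back to the full minified text, then parses it.
restored = json.loads(gzip.decompress(compressed))
assert restored == data
```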

Stream Parsing

For very large JSON files, stream parsing can be a more efficient approach. Instead of loading the entire document into memory at once, a streaming parser processes the input incrementally and hands back values as they are completed. This keeps memory usage low and lets processing start before the whole input has been read, which helps most when only a portion of the data is needed. The command-line tool jq offers a --stream mode, and some database systems offer similar capabilities. This technique is especially useful when dealing with data streams or datasets that exceed available memory.
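
Python's standard json module has no incremental parser for a single huge document, so this sketch assumes the data is newline-delimited JSON (one document per line), a common format for logs and exports. For one giant array, a third-party streaming parser such as ijson would be needed instead. The iter_ndjson generator is a hypothetical helper: it holds only one line in memory at a time and lets the caller stop early.

```python
import io
import json
from typing import Any, Iterator, TextIO

def iter_ndjson(stream: TextIO) -> Iterator[Any]:
    """Yield one parsed record per non-blank line of a newline-delimited JSON stream.

    Only the current line is held in memory, so peak memory is roughly the size
    of the largest single record rather than the whole input.
    """
    for line_number, line in enumerate(stream, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        try:
            yield json.loads(line)
        except json.JSONDecodeError as exc:
            raise ValueError(f"invalid JSON on line {line_number}: {exc}") from exc

# Stop as soon as the needed record is found; later lines are never parsed.
sample = io.StringIO('{"id": 1, "ok": true}\n{"id": 2, "ok": false}\n\n{"id": 3, "ok": true}\n')
first_failure = next(r for r in iter_ndjson(sample) if not r["ok"])
print(first_failure)  # {'id': 2, 'ok': False}
```

With a real file, pass the open file object (for example, the result of open(path, encoding="utf-8")) instead of the StringIO.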

Caching Parsed Data

If the same JSON data is parsed repeatedly, caching the parsed result can avoid redundant parsing operations. This can be implemented using in-memory caches or more sophisticated caching systems. Before parsing, check if the data is already in the cache. If so, retrieve the cached result instead of parsing again. This approach can dramatically improve performance in scenarios where data is frequently accessed but rarely changes.
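
A minimal in-process sketch uses Python's functools.lru_cache, assuming the identical raw string arrives repeatedly (for example, a configuration blob re-read on every request). The function name parse_cached is hypothetical. A cache lookup still hashes the input string, which is far cheaper than parsing it. Every caller receives the same object, so callers must treat the result as read-only.

```python
import json
from functools import lru_cache
from typing import Any

@lru_cache(maxsize=128)
def parse_cached(raw: str) -> Any:
    """Parse `raw` once; later calls with an equal string return the cached object."""
    return json.loads(raw)

payload = '{"theme": "dark", "retries": 3}'
a = parse_cached(payload)
b = parse_cached(payload)
print(a is b)                          # True: the second call skipped parsing
print(parse_cached.cache_info().hits)  # 1
```

If callers need to modify the data, they should copy it first (or the cache should store an immutable representation); otherwise one caller's change would leak into every later cache hit.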

Use Alternative Data Formats

In some cases, using a different data format altogether may be a better option. Formats like Protocol Buffers or Apache Avro are designed for efficient serialization and deserialization and may offer better performance than JSON, especially for large or complex data structures. However, switching to a different format may require changes to both the client and server sides of the application. Consider the trade-offs between performance and compatibility when choosing a data format.

Web Workers (for JavaScript)

In web applications, parsing large JSON files on the main thread can block the user interface, leading to a poor user experience. Web Workers let you offload the parsing task to a separate thread, so the UI stays responsive while the JSON data is processed. This is particularly important for applications that handle large datasets or perform complex data transformations. The main thread then receives the parsed data from the Web Worker once the work is complete. Note that postMessage() copies that data using the structured clone algorithm, which has its own cost for large objects, so it often pays to filter or aggregate inside the worker and send back only what the UI needs.

Real-World Examples and Benchmarks

To illustrate the performance implications of JSON parsing, consider some real-world examples and benchmarks. A study comparing different JSON parsing libraries in JavaScript found that native JSON.parse() was consistently faster than most third-party libraries for simple parsing tasks. Serialization, the reverse direction, is a separate case: schema-based libraries like fast-json-stringify can outperform JSON.stringify() when the shape of the objects is known in advance. In Python, orjson has demonstrated significant performance gains over the standard json library, especially for large JSON documents.

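In a typical web application, the cost of handling a 1MB JSON payload depends heavily on the data's shape, the parser, and the device: a native parser on a fast desktop handles it far more quickly than a slower parser on a low-end mobile device, so measure with your own payloads on your target hardware. Download time adds to parsing time, and the two call for different fixes. Compression shortens the download, while an optimized parser (or a Web Worker) shortens or hides the parse itself. Together these can have a noticeable impact on the perceived performance of the application, especially on mobile devices or networks with limited bandwidth.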

Conclusion

While JSON parsing can be expensive, especially when dealing with large or complex documents, there are many strategies to mitigate its performance impact. Choosing the right parsing library, minimizing JSON size, using stream parsing, caching parsed data, offloading parsing to Web Workers, and considering alternative data formats are all effective ways to optimize JSON handling. By understanding the factors that influence parsing performance and applying the appropriate techniques, developers can keep their applications responsive and efficient, even when dealing with large amounts of JSON data. Knowing when JSON parsing becomes expensive is key to building performant applications.