Mastering the Web: A Comprehensive Guide to Sample Web Crawlers

In today’s data-driven world, the ability to efficiently extract information from the vast expanse of the internet is paramount. This is where sample web crawlers come into play. A sample web crawler, also known as a spider or bot, is an automated program that systematically browses the World Wide Web, collecting data from websites. This comprehensive guide will delve into the intricacies of sample web crawlers, exploring their functionality, applications, development, and ethical considerations.

What is a Web Crawler?

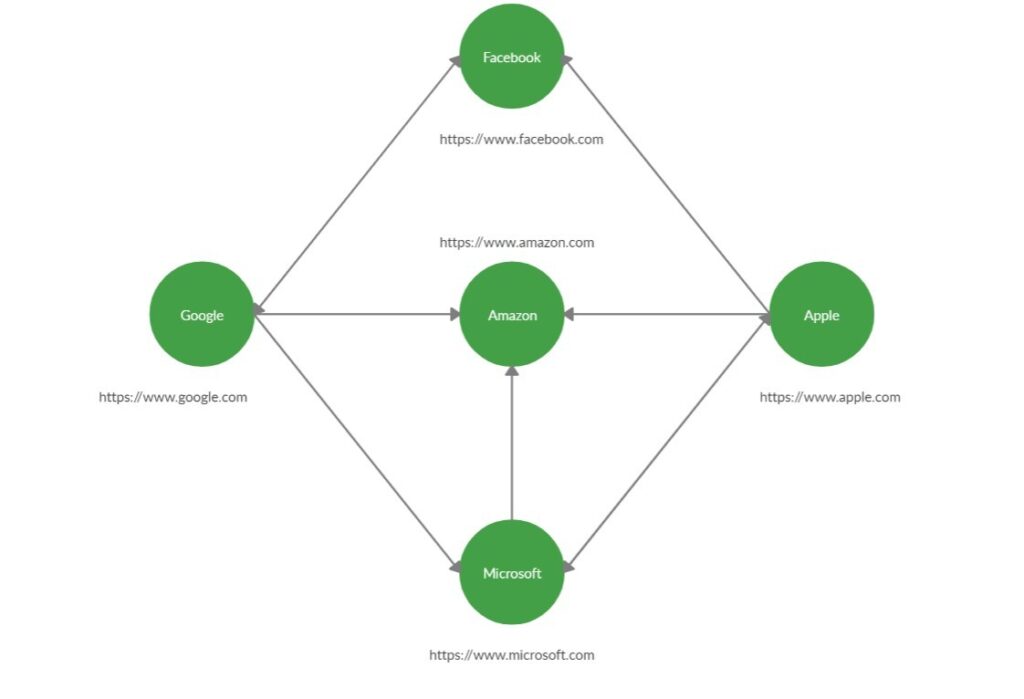

At its core, a web crawler is a software application designed to navigate the internet in an organized and automated manner. It starts with a list of URLs to visit, called the seed URLs. The crawler then visits these URLs, identifies all the hyperlinks on the page, and adds them to a queue of URLs to be visited later. This process continues recursively, allowing the crawler to explore a significant portion of the web.

The primary purpose of a sample web crawler is to gather information. This information can range from the content of web pages to metadata such as page titles, descriptions, and keywords. The collected data is then typically stored in a database or other structured format for further analysis and use. The ability to collect this data efficiently makes sample web crawlers invaluable tools for various applications.

How Web Crawlers Work

The operation of a sample web crawler can be broken down into several key steps:

- Initialization: The crawler starts with a set of seed URLs.

- Fetching: The crawler retrieves the content of a URL from the web server.

- Parsing: The fetched content (typically HTML) is parsed to extract relevant information, such as text, images, and links.

- Link Extraction: All hyperlinks on the page are identified and added to the crawl queue.

- Filtering: The crawler applies filtering rules to determine which URLs in the crawl queue should be visited. This might involve excluding URLs based on domain, file type, or other criteria.

- Storage: The extracted data is stored in a database or other storage system.

- Iteration: The crawler repeats steps 2-6 until it has visited a sufficient number of pages or reached a predefined stopping point.

Effective sample web crawlers must be designed to be robust, efficient, and respectful of website resources. They must handle errors gracefully, avoid overloading web servers, and adhere to the rules specified in the website’s robots.txt file. [See also: Understanding Robots.txt and Web Crawler Etiquette]

Applications of Web Crawlers

Sample web crawlers have a wide range of applications across various industries and domains. Some of the most common applications include:

- Search Engine Indexing: Search engines like Google and Bing use web crawlers extensively to index the content of the web. These crawlers visit billions of pages, extracting text and metadata to build a searchable index.

- Data Mining: Web crawlers can be used to collect data for research, analysis, and business intelligence. For example, they can be used to monitor prices on e-commerce websites, track social media trends, or gather information about competitors.

- Content Aggregation: News aggregators and other content platforms use web crawlers to collect articles and other content from various sources.

- SEO Auditing: Web crawlers can be used to analyze websites for SEO issues, such as broken links, missing meta descriptions, and duplicate content.

- Website Archiving: Organizations like the Internet Archive use web crawlers to create archives of websites, preserving them for future reference.

- Monitoring and Alerting: Sample web crawlers can be configured to monitor websites for changes and send alerts when specific events occur, such as a price drop or a new product launch.

Developing a Sample Web Crawler

Developing a sample web crawler from scratch can be a complex task, but it is also a valuable learning experience. There are several programming languages and libraries that can be used for web crawling, including Python, Java, and Node.js.

Here’s a simplified overview of the steps involved in building a basic web crawler in Python using the `requests` and `BeautifulSoup4` libraries:

- Install Libraries: Install the necessary libraries using pip:

pip install requests beautifulsoup4 - Fetch the Web Page: Use the `requests` library to fetch the content of a URL.

- Parse the HTML: Use the `BeautifulSoup4` library to parse the HTML content and extract the desired data.

- Extract Links: Identify all the hyperlinks on the page and add them to the crawl queue.

- Implement Filtering: Apply filtering rules to determine which URLs to visit.

- Store Data: Store the extracted data in a database or file.

- Handle Errors: Implement error handling to gracefully handle issues such as network errors and invalid HTML.

A sample web crawler implementation can be as simple or as complex as needed. For more sophisticated crawlers, consider using frameworks like Scrapy, which provides a robust and scalable platform for web crawling. [See also: Choosing the Right Web Crawling Framework]

Ethical Considerations

While web crawling can be a powerful tool, it is important to consider the ethical implications of your activities. Web crawlers can consume significant resources on web servers, and poorly designed crawlers can even cause websites to crash. It is crucial to design your crawler to be respectful of website resources and to comply with the website’s terms of service and robots.txt file.

Here are some ethical considerations to keep in mind when developing and using web crawlers:

- Respect Robots.txt: Always check the website’s robots.txt file before crawling and adhere to the rules specified in the file. This file tells crawlers which parts of the website they are allowed to access.

- Avoid Overloading Servers: Design your crawler to avoid making too many requests to a website in a short period of time. Implement delays and concurrency limits to prevent overloading the server.

- Identify Your Crawler: Include a user-agent string in your crawler’s HTTP requests that identifies your crawler and provides contact information. This allows website administrators to contact you if they have any concerns.

- Respect Copyright: Be mindful of copyright laws when collecting and using data from the web. Do not reproduce copyrighted material without permission.

- Protect Privacy: Be careful not to collect personal information without consent. Comply with privacy regulations such as GDPR and CCPA.

Advanced Web Crawling Techniques

Beyond the basics, there are several advanced techniques that can be used to improve the performance and effectiveness of web crawlers. These techniques include:

- Distributed Crawling: Distributing the crawling process across multiple machines can significantly increase the speed and scale of your crawler.

- Asynchronous Crawling: Using asynchronous programming techniques can allow your crawler to handle multiple requests concurrently, improving efficiency.

- Dynamic Content Crawling: Crawling websites that rely heavily on JavaScript and AJAX requires specialized techniques, such as using headless browsers like Puppeteer or Selenium.

- Machine Learning: Machine learning can be used to improve the accuracy and efficiency of web crawlers. For example, machine learning models can be used to identify and prioritize the most important pages to crawl.

The Future of Web Crawling

As the web continues to evolve, web crawling will become even more important. With the rise of the Semantic Web and the increasing use of structured data, web crawlers will need to be able to extract and process more complex information. The development of AI and machine learning will also play a significant role in the future of web crawling, enabling crawlers to be more intelligent and adaptable.

In conclusion, sample web crawlers are essential tools for extracting information from the web. By understanding the principles of web crawling, developers and researchers can leverage these tools to gather data for a wide range of applications. However, it is crucial to use web crawlers ethically and responsibly, respecting website resources and complying with relevant laws and regulations. The ongoing evolution of the web promises an exciting future for web crawling, with new technologies and techniques constantly emerging to improve their performance and capabilities. A well-designed sample web crawler can unlock a wealth of information, providing valuable insights and driving innovation across various fields.