Power Automate Web Scraping: A Comprehensive Guide

Extracting information from websites is increasingly valuable. Power Automate web scraping lets businesses and individuals automate this process and gather data for purposes ranging from market research to competitive analysis. This guide covers how to use Power Automate for web scraping, from the basics to more advanced techniques, along with its limitations and alternatives.

What is Web Scraping?

Web scraping, also known as web harvesting or web data extraction, is the process of automatically extracting data from websites. Instead of manually copying and pasting information, web scraping tools automate this process, allowing you to quickly and efficiently gather large amounts of data. This data can then be used for various purposes, such as:

- Market research

- Competitive analysis

- Lead generation

- Price monitoring

- Data aggregation

Why Use Power Automate for Web Scraping?

Power Automate, formerly known as Microsoft Flow, is Microsoft’s service for automating tasks and workflows. It runs both in the cloud (cloud flows) and on Windows desktops (desktop flows). Although it was not designed specifically for web scraping, Power Automate offers several advantages for this purpose:

- Automation: Power Automate allows you to schedule and automate web scraping tasks, ensuring that you always have the latest data.

- Integration: Power Automate integrates seamlessly with other Microsoft services, such as Excel, SharePoint, and Power BI, making it easy to store and analyze the scraped data.

- No-code/Low-code: Power Automate provides a user-friendly interface that allows you to create web scraping workflows without writing complex code.

- Scalability: Power Automate can process large volumes of data within the request and run limits of your license. This makes it suitable for small and medium-sized web scraping projects.

- Cost-Effective: Many Microsoft 365 licenses include standard connectors, and Power Automate for desktop is available at no extra cost on Windows 10 and 11. Premium connectors, including the HTTP action, require a paid plan. This can make Power Automate a cost-effective option, especially for organizations already using Microsoft products.

Understanding Power Automate’s Web Automation Capabilities

Power Automate offers two main ways to perform web scraping. Desktop flows (formerly called UI flows) record and replay user interactions on a website. Cloud flows can instead use HTTP requests to retrieve a website’s content directly.

Desktop Flows (formerly UI Flows)

Desktop flows (formerly called UI flows) automate desktop and web applications by recording and replaying your actions. This is particularly useful when you need to interact with a website in a specific way, such as clicking buttons, filling out forms, or navigating between pages. Power Automate for desktop also provides web automation actions, including one that extracts data from a web page into a table. [See also: Power Automate UI Flows Tutorial]

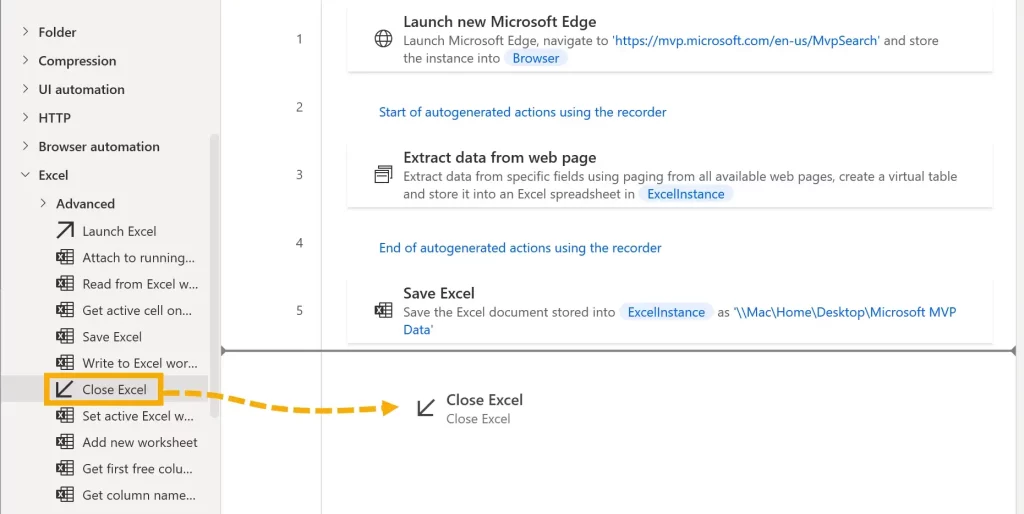

To use desktop flows (the current name for UI flows) for web scraping, you would typically follow these steps:

- Create a desktop flow: Start by creating a new flow in Power Automate for desktop.

- Record your actions: Use the recorder to capture the steps you take to navigate to the website and extract the data you need.

- Define inputs and outputs: Specify the inputs that the flow requires (e.g., search terms, URLs) and the outputs that it will generate (e.g., scraped data).

- Test your flow: Run the flow to confirm that it works correctly.

- Integrate with other Power Automate actions: Call the desktop flow from a cloud flow (this requires a premium license) and pass its outputs to other actions, such as sending emails, storing data in a database, or updating a spreadsheet.

HTTP Requests

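Another way to perform Power Automate web scraping is with HTTP requests. The flow sends requests to the website’s server and parses the responses to extract the desired data. This method is more technical than desktop flows (formerly UI flows). Because no browser is involved, however, it can be faster and simpler for straightforward pages. The drawback is that the HTTP action receives only the HTML the server returns. Any content that JavaScript adds later in the browser will not be in the response.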

To use HTTP requests for web scraping, you would typically follow these steps:

- Send an HTTP request: Use the “HTTP” action (a premium connector) to send a request to the website’s URL.

- Parse the response: If the website returns JSON (for example, from a public API), use the “Parse JSON” action. If it returns HTML, you need a workaround, because cloud flows have no built-in HTML parser. Options include string expressions such as split() and substring(), an external parsing service, or a desktop flow.

- Extract the data: Extract the desired data from the parsed response.

- Store the data: Store the extracted data in a database, spreadsheet, or other data storage system.
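The four steps above map onto a short script. Below is a minimal Python sketch of the same pipeline outside Power Automate. It assumes the site exposes a JSON endpoint that returns a "products" list of objects with name and price fields. That response shape, the function names, and the User-Agent string are all hypothetical.

```python
import csv
import io
import json
import urllib.request


def fetch(url, timeout=10.0):
    """Step 1: send a GET request and return the response body as text."""
    # Hypothetical User-Agent string; identify your own scraper here.
    req = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset)


def extract_products(body):
    """Steps 2 and 3: parse the JSON body and keep only the fields we need.

    Assumes a hypothetical response shape:
    {"products": [{"name": ..., "price": ...}, ...]}
    """
    data = json.loads(body)
    return [{"name": p.get("name"), "price": p.get("price")}
            for p in data.get("products", [])]


def store_csv(rows, out):
    """Step 4: write the extracted rows to any file-like object as CSV."""
    writer = csv.DictWriter(out, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    # Offline demo with a canned response instead of a live fetch().
    sample = '{"products": [{"name": "Widget", "price": 9.99, "sku": "W1"}]}'
    buffer = io.StringIO()
    store_csv(extract_products(sample), buffer)
    print(buffer.getvalue())
```

Keeping fetching separate from extraction lets you test the parsing logic against saved responses. This also helps you pinpoint what broke when a site changes its structure.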

Step-by-Step Guide: Building a Simple Web Scraping Flow

Let’s walk through a simple example of building a web scraping flow using Power Automate. We’ll scrape the title and price of a product from an e-commerce website.

Step 1: Create a New Flow

In Power Automate, click on “Create” and select “Instant cloud flow.” Give your flow a name (e.g., “Product Price Scraper”) and choose the “Manually trigger a flow” trigger.

Step 2: Add an HTTP Action

Click on “New step” and search for “HTTP.” Select the “HTTP” action. Configure the action as follows:

- Method: GET

- URI: The URL of the product page you want to scrape. (e.g., https://www.example.com/product/123)

Step 3: Parse the HTML

Since we’re dealing with HTML, we need to parse it to extract the data. Power Automate cloud flows don’t have a built-in HTML parser, so we’ll use a workaround and send the HTML to an external service such as Apify. Add another “HTTP” action that sends the HTML to an Apify actor (a program hosted on Apify) for parsing. You’ll need an Apify account and API token for this step. The actor name and input format below are illustrative, so check the input schema of the actor you actually use. [See also: Apify Integration with Power Automate]

Configure the action as follows:

- Method: POST

- URI: https://api.apify.com/v2/acts/apify~html-to-json/run-sync?token=YOUR_APIFY_TOKEN (Replace YOUR_APIFY_TOKEN with your actual API key)

- Headers: Content-Type set to application/json

- Body:

{ "html": "@{body('HTTP')}", "selector": { "title": "h1", "price": ".price" } }

This sends the HTML from the first HTTP request to Apify. Assuming the actor accepts this input format, the request tells it to extract the text of the h1 element as the title and the text of the element with the class price as the price.
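To make the selector idea concrete, here is a local stand-in for what such a parser does, written with Python’s standard-library html.parser. It is not Apify’s implementation. It supports only bare tag selectors (h1) and single class selectors (.price), returns the text of the first match for each field, and assumes reasonably well-formed markup. The class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class SelectorExtractor(HTMLParser):
    """Capture the text of the first element matching each simple selector."""

    def __init__(self, selectors):
        super().__init__()
        self.selectors = selectors   # field name -> "tag" or ".class"
        self.results = {}            # field name -> extracted text
        self._open = {}              # field name -> [nesting depth, text chunks]

    @staticmethod
    def _matches(selector, tag, attrs):
        if selector.startswith("."):
            classes = (dict(attrs).get("class") or "").split()
            return selector[1:] in classes
        return tag == selector

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        for state in self._open.values():   # one level deeper inside each capture
            state[0] += 1
        for field, selector in self.selectors.items():
            if (field not in self.results and field not in self._open
                    and self._matches(selector, tag, attrs)):
                self._open[field] = [1, []]

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        for field in list(self._open):
            state = self._open[field]
            state[0] -= 1
            if state[0] == 0:               # the matched element just closed
                # Collapse runs of whitespace into single spaces.
                self.results[field] = " ".join("".join(state[1]).split())
                del self._open[field]

    def handle_data(self, data):
        for state in self._open.values():
            state[1].append(data)


def extract(html, selectors):
    """Return {field: text} for every selector that matched."""
    parser = SelectorExtractor(selectors)
    parser.feed(html)
    parser.close()
    return parser.results


if __name__ == "__main__":
    page = '<h1>Blue <b>Widget</b></h1><span class="price sale">$19.99</span>'
    print(extract(page, {"title": "h1", "price": ".price"}))
```

Fields whose selector never matches are simply absent from the result. A flow consuming this kind of output should treat each field as optional.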

Step 4: Parse the JSON Response

Add a “Parse JSON” action and set its “Content” field to @{body('HTTP_2')} (assuming the second HTTP action is named “HTTP_2”). The action also needs a schema. To create one, run the flow once and copy the output of HTTP_2 from the run history. Then select “Generate from sample” in the Parse JSON action and paste it in.
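To show roughly what a generated schema looks like, the following Python sketch infers a minimal JSON Schema from a sample payload. It is an approximation for illustration, not Microsoft’s algorithm, and infer_schema is a hypothetical name.

```python
import json


def infer_schema(value):
    """Infer a minimal JSON Schema describing a sample JSON value."""
    if isinstance(value, bool):            # test bool first: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if value is None:
        return {}                          # no type information: accept anything
    if isinstance(value, list):
        # Use the first element as representative of the whole array.
        return {"type": "array", "items": infer_schema(value[0]) if value else {}}
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {key: infer_schema(val) for key, val in value.items()},
            "required": list(value),       # every key seen in the sample
        }
    raise TypeError(f"not a JSON value: {type(value).__name__}")


if __name__ == "__main__":
    sample = json.loads('{"title": "Blue Widget", "price": "$19.99"}')
    print(json.dumps(infer_schema(sample), indent=2))
```

A schema built from a single sample can be too strict. If a later page lacks a field or returns null for it, validation can fail. Consider loosening "required" or allowing null for fields that may be missing.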

Step 5: Store the Data

Now that we have the title and price, we can store them. For this example, let’s store them in a SharePoint list. Add a “Create item” action and configure it to connect to your SharePoint list. Map the “Title” and “Price” fields in the SharePoint list to the corresponding fields in the parsed JSON output (e.g., @{body('Parse_JSON')?['title']} and @{body('Parse_JSON')?['price']}).
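The ?['title'] syntax in these expressions returns null instead of failing when a property is missing. The Python sketch below mirrors that with dict.get(). It also converts the scraped price text to a number, assuming your Price column is a Number column and that prices use "." as the decimal separator. parse_price and to_list_item are hypothetical helpers.

```python
import re
from decimal import Decimal

# First run of digits, optionally with thousands commas and a decimal part.
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text):
    """Convert scraped text such as '$1,299.00' to a Decimal, or None if no number.

    Assumes '.' is the decimal separator and ',' separates thousands.
    """
    if text is None:
        return None
    match = PRICE_PATTERN.search(text)
    return Decimal(match.group().replace(",", "")) if match else None


def to_list_item(parsed):
    """Map parsed JSON to list columns; .get() returns None for a missing key,
    much as the ?['...'] operator returns null instead of failing."""
    return {
        "Title": parsed.get("title"),
        "Price": parse_price(parsed.get("price")),
    }


if __name__ == "__main__":
    print(to_list_item({"title": "Blue Widget", "price": "$1,299.00"}))
    print(to_list_item({"title": "Sold out"}))
```

Decimal is used instead of float so that currency values are not subject to binary floating-point rounding.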

Step 6: Test the Flow

Save and test the flow. If everything is configured correctly, it should scrape the title and price from the product page and store them in your SharePoint list.

Advanced Techniques for Power Automate Web Scraping

While the above example demonstrates a simple web scraping flow, there are several advanced techniques you can use to enhance your web scraping capabilities with Power Automate:

- Pagination Handling: Many websites spread data across multiple pages. You can use a “Do until” loop with conditions in Power Automate to request each page in turn and stop when there are no more pages.

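- Dynamic Content Handling: Some websites load content with JavaScript after the initial page load, so that content never appears in an HTTP response. Desktop flows, which drive a real browser, can capture it. Alternatively, you may be able to request the JSON endpoint that the page itself calls to fetch its data.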

- Error Handling: Web scraping can be unreliable, because websites can change their structure or become unavailable. Build error handling into your flows so that failures are handled gracefully. Useful tools include Scope actions, “Configure run after” settings, and the HTTP action’s retry policy.

- Proxy Servers: Some websites block IP addresses that send excessive requests. The HTTP action has no built-in proxy rotation, so rotating your IP address usually means routing requests through a third-party proxy or scraping service.

- Rate Limiting: To avoid overloading the website’s server, add a “Delay” action between requests so that you do not send too many requests in a short period of time.
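Pagination, error handling, and rate limiting fit together naturally in one loop. The Python sketch below shows logic you would otherwise build from a “Do until” loop, the HTTP action’s retry policy, and a “Delay” action. fetch_page is a hypothetical callable that returns the items on page n, or an empty list once the pages run out. The sleep parameter is injectable so the loop can be tested without waiting. Proxy rotation is left out.

```python
import time


def scrape_all_pages(fetch_page, max_pages=50, delay_s=2.0, retries=3,
                     backoff_s=1.0, sleep=time.sleep):
    """Collect items from successive pages until a page comes back empty.

    fetch_page(n) is a hypothetical callable returning the items on page n
    (an empty list when there are no more pages). urllib.error.URLError
    (including HTTPError) and timeouts are subclasses of OSError, so they
    trigger a retry; any other exception propagates immediately.
    """
    items = []
    for page in range(1, max_pages + 1):      # hard cap so a bug cannot loop forever
        for attempt in range(retries):
            try:
                batch = fetch_page(page)
                break
            except OSError:
                if attempt == retries - 1:
                    raise                     # out of retries: surface the error
                sleep(backoff_s * 2 ** attempt)   # exponential backoff: 1s, 2s, 4s, ...
        if not batch:
            break                             # pagination: past the last page
        items.extend(batch)
        sleep(delay_s)                        # rate limiting between page requests
    return items
```

Capping the page count and backing off exponentially keeps a misbehaving site, or a bug in the stop condition, from turning a scheduled flow into a flood of requests.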

Best Practices for Power Automate Web Scraping

To ensure that your Power Automate web scraping projects are successful, it’s important to follow these best practices:

- Respect Website Terms of Service: Before scraping a website, carefully review its terms of service to ensure that web scraping is permitted.

- Be Ethical: Avoid scraping websites that are known to be sensitive or that contain personal information.

- Use a User-Agent: Set a User-Agent header in your HTTP requests that identifies your scraper and includes contact details. This allows website administrators to contact you if they have any concerns.

- Monitor Your Flows: Regularly monitor your flows to ensure that they are working correctly and that you are not being blocked by the website.

- Optimize for Performance: Optimize your flows for performance by minimizing the number of HTTP requests and using efficient parsing techniques.
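The User-Agent and monitoring advice can be sketched in Python as follows. The tool name and contact address in USER_AGENT are placeholders. Treating 403 and 429 responses as signs of blocking is a common heuristic, not a guarantee.

```python
import urllib.error
import urllib.request

# Placeholder identity: replace with your tool's name and a real contact address.
USER_AGENT = "ProductPriceScraper/1.0 (contact: scraping-admin@example.com)"

# 403 Forbidden and 429 Too Many Requests often indicate that a site is blocking you.
BLOCKED_STATUSES = {403, 429}


def build_request(url):
    """Create a GET request that identifies the scraper via the User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT}, method="GET")


def looks_blocked(status):
    """Return True for status codes worth alerting on when monitoring a scraper."""
    return status in BLOCKED_STATUSES


def fetch_politely(url, timeout=10.0):
    """Fetch a page; return (status, body), where body is None on HTTP errors."""
    try:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
            return resp.status, resp.read()
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; report the code instead.
        return err.code, None
```

In a scheduled run, you would log or raise an alert when looks_blocked(status) is true rather than retrying immediately.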

Limitations of Power Automate for Web Scraping

While Power Automate can be a useful tool for web scraping, it’s important to be aware of its limitations:

- Complexity: For complex web scraping scenarios, Power Automate may not be the most suitable tool. In these cases, you may be better off using a dedicated web scraping library or service.

- Maintenance: Websites can change their structure frequently, which can break your web scraping flows. You will need to regularly maintain your flows to ensure that they continue to work correctly.

- Scalability: While Power Automate can handle large volumes of data, it may not be as scalable as dedicated web scraping services.

Alternatives to Power Automate for Web Scraping

If Power Automate web scraping doesn’t quite meet your needs, consider these alternatives:

- Dedicated Web Scraping Tools: No-code tools like Octoparse and ParseHub are designed specifically for web scraping and offer more scraping-specific features than Power Automate.

- Programming Languages: Languages like Python with libraries like Beautiful Soup and Scrapy provide more flexibility and control over the web scraping process.

- Cloud-Based Web Scraping Services: Services like Apify and Bright Data offer fully managed web scraping solutions that handle everything from proxy management to data extraction.

Conclusion

Power Automate web scraping offers a powerful and accessible way to automate data extraction from websites. By understanding the basics of web scraping, the capabilities of Power Automate, and the best practices for ethical and efficient scraping, you can leverage this tool to gather valuable data for your business or personal projects. While Power Automate has its limitations, it remains a valuable tool in the arsenal of any data-driven professional.