Puppeteer Web Scraping with GitHub: A Comprehensive Guide

Web scraping has become an essential tool for businesses and researchers alike, letting them extract valuable data from websites at scale. Among the many web scraping tools available, Puppeteer stands out as a powerful and versatile Node.js library. Pairing it with GitHub adds version control, easier collaboration, and, through GitHub Actions, scheduled automation. This guide covers several topics:

- what Puppeteer can do;
- how to set up and manage a scraping project on GitHub;
- best practices for responsible scraping;
- common problems and how to troubleshoot them.

What is Puppeteer?

Puppeteer is a Node.js library developed by Google that provides a high-level API for controlling Chrome or Chromium. By default it runs the browser headless, that is, without a visible window, so your code drives the browser entirely programmatically. This makes it well suited to automating tasks such as:

- Web scraping: Extracting data from websites.

- Automated testing: Simulating user interactions to test web applications.

- Generating PDFs: Creating PDF documents from web pages.

- Capturing screenshots: Taking snapshots of web pages.
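The last two tasks each map onto a single Page method. The sketch below assumes a `page` created with `browser.newPage()`. The helper name `capturePage` and the output file names are illustrative, not part of Puppeteer. According to the Puppeteer documentation, `page.pdf()` is only supported when Chrome runs headless.

```javascript
// Hypothetical helper: save a full-page screenshot and a PDF of one URL.
// `page` is assumed to be a Puppeteer Page obtained from browser.newPage().
async function capturePage(page, url, outBase) {
  // Wait until network activity settles so late-loading content is included.
  await page.goto(url, { waitUntil: 'networkidle2' });
  // Capture the whole scrollable page, not just the visible viewport.
  await page.screenshot({ path: `${outBase}.png`, fullPage: true });
  // Print the page to PDF (headless mode only), keeping background colors.
  await page.pdf({ path: `${outBase}.pdf`, format: 'A4', printBackground: true });
  return [`${outBase}.png`, `${outBase}.pdf`];
}
```

For example, `await capturePage(page, 'https://www.example.com', 'example')` would write `example.png` and `example.pdf` to the current directory.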

Puppeteer’s API provides a rich set of methods for navigating web pages, interacting with elements, and extracting data. Its ability to execute JavaScript within the browser context makes it particularly well-suited for scraping dynamic websites that rely heavily on JavaScript.
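The split between Node.js and the browser context is easiest to see with `page.evaluate()`. The callback is serialized and run inside the page, where `document` exists. Only its serializable return value comes back to Node.js. The helper name and the shape of the returned object below are illustrative.

```javascript
// Hypothetical helper: read data from the live, JavaScript-rendered DOM.
async function readRenderedSummary(page, selector) {
  // The arrow function runs in the browser, not in Node.js, so it cannot see
  // Node variables; `selector` is therefore passed in as an explicit argument.
  return page.evaluate((sel) => {
    const nodes = Array.from(document.querySelectorAll(sel));
    return {
      title: document.title,
      count: nodes.length,
      texts: nodes.map((n) => n.textContent.trim()),
    };
  }, selector);
}
```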

Why Use Puppeteer for Web Scraping?

Several factors contribute to Puppeteer’s popularity as a web scraping tool:

- JavaScript Rendering: Because Puppeteer drives a real browser, pages run their JavaScript just as they would for a human visitor. You can therefore scrape content produced by client-side rendering. This is a significant advantage over traditional web scraping tools that only parse the static HTML returned by the server.

- Headless Operation: Puppeteer runs the browser in headless mode by default, so it doesn't require a display. This lets you run scraping tasks on servers and CI machines without a graphical environment, although a full browser still uses more memory and CPU than a plain HTTP client.

- Comprehensive API: Puppeteer’s API provides a wide range of methods for interacting with web pages, including navigation, element selection, form submission, and data extraction.

- Developer-Friendly: Puppeteer is a JavaScript/TypeScript library, so web developers can write their scrapers in a language they already use every day.

- Active Community: Puppeteer has a large and active community, providing ample resources and support for developers.

Integrating Puppeteer with GitHub

GitHub is a web-based platform for version control and collaboration. Integrating Puppeteer web scraping projects with GitHub offers several advantages:

- Version Control: Git tracks every change to your Puppeteer scripts and GitHub hosts that history. This makes it easy to see what changed and to revert to a previous version when a site update breaks your scraper.

- Collaboration: GitHub facilitates collaboration among developers, allowing them to share code, contribute to projects, and resolve issues.

- Code Backup: GitHub provides a secure backup of your Puppeteer code, protecting it from data loss.

- Automation: GitHub Actions can be used to automate Puppeteer web scraping tasks, such as running scripts on a schedule or triggering them based on specific events.

To integrate Puppeteer with GitHub, you’ll typically create a Git repository for your project and commit your Puppeteer scripts to the repository. You can then use GitHub’s features to manage your code, collaborate with others, and automate your web scraping tasks.
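A common pattern for scheduled scraping on GitHub Actions is to have the script write its results to a file in the repository. The workflow then commits that file, so the Git history doubles as a record of how the data changed over time. The helper below is a minimal sketch of the script side only. The `data/` directory and the date-based file name are assumptions, and the workflow that commits the file is not shown.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Hypothetical helper: write scraped records to <dir>/YYYY-MM-DD.json.
// One file per day keeps each day's output as a separate, reviewable Git diff.
function saveResults(records, dir = 'data', now = new Date()) {
  fs.mkdirSync(dir, { recursive: true });
  const day = now.toISOString().slice(0, 10); // UTC date, e.g. 2024-05-01
  const file = path.join(dir, `${day}.json`);
  // Pretty-print with a trailing newline so diffs stay readable on GitHub.
  fs.writeFileSync(file, JSON.stringify(records, null, 2) + '\n');
  return file;
}
```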

Setting Up a Puppeteer Web Scraping Project on GitHub

Here’s a step-by-step guide to setting up a Puppeteer web scraping project on GitHub:

- Create a GitHub Repository: Create a new repository on GitHub to store your project’s code.

- Initialize a Node.js Project: Create a new directory for your project and initialize a Node.js project using the command `npm init -y`.

- Install Puppeteer: Install the Puppeteer library using the command `npm install puppeteer`. By default, this also downloads a compatible browser build for Puppeteer to use.

- Write Your Puppeteer Script: Create a JavaScript file (e.g., `scraper.js`) and write your Puppeteer web scraping code.

- Commit Your Code to GitHub: Add `node_modules/` to a `.gitignore` file so dependencies are not committed. Then add your files to the Git repository, commit them with a descriptive message, and push them to GitHub.

- Configure GitHub Actions (Optional): If you want to automate your scraping tasks, configure GitHub Actions to run your script on a schedule or based on specific events.

Here’s an example of a basic Puppeteer web scraping script:

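```javascript
const puppeteer = require('puppeteer');

(async () => {
  // Launch a headless Chrome instance.
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto('https://www.example.com');

    // Read the text of the <title> element.
    const title = await page.$eval('title', el => el.textContent);
    console.log('Title:', title);
  } finally {
    // Always close the browser, even if a step above throws.
    await browser.close();
  }
})();
```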

This script launches a headless browser, navigates to `https://www.example.com`, extracts the title of the page, logs it to the console, and then closes the browser.
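The basic script calls `puppeteer.launch()` with its defaults. The same script often runs both on a developer machine and on a GitHub Actions runner. One option is to derive the launch options from environment variables. GitHub Actions sets `CI=true` on its runners, while `HEADFUL` is a hypothetical variable name chosen for this sketch. `slowMo` delays each Puppeteer operation by the given number of milliseconds, which makes a visible browser easier to follow.

```javascript
// Hypothetical helper: choose puppeteer.launch() options from the environment.
function launchOptions(env = process.env) {
  const inCI = env.CI === 'true';
  // Allow a visible browser locally for debugging, but never on CI runners.
  const headful = !inCI && env.HEADFUL === '1';
  return {
    headless: !headful,
    // Slow operations down only when someone is watching the browser window.
    slowMo: headful ? 100 : 0,
  };
}
```

With this in place, the launch line would become `const browser = await puppeteer.launch(launchOptions());`, and running `HEADFUL=1 node scraper.js` locally would open a visible window.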

Best Practices for Puppeteer Web Scraping

To ensure successful and ethical Puppeteer web scraping, follow these best practices:

- Respect `robots.txt`: Always check the website’s `robots.txt` file to see which pages are allowed to be scraped.

- Rate Limiting: Implement rate limiting to avoid overloading the website’s server. Send requests at a reasonable pace to prevent your IP address from being blocked.

- User-Agent: Set a descriptive user-agent string (for example, with `page.setUserAgent()`) that identifies your scraper. This helps website administrators understand where the traffic is coming from.

- Error Handling: Implement robust error handling to gracefully handle unexpected errors and prevent your script from crashing.

- Data Validation: Validate the extracted data to ensure its accuracy and consistency.

- Avoid Scraping Sensitive Data: Do not scrape sensitive data such as personal information, financial data, or copyrighted content without permission.

- Use Proxies: Consider using proxies to rotate your IP address and avoid being blocked.

- Monitor Your Scraper: Regularly monitor your scraper to ensure it is running correctly and not causing any issues.
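Two of these practices, honoring `robots.txt` and rate limiting, can be sketched without a browser at all. The code below is a simplified illustration, not a complete robots.txt parser, and it makes several assumptions:

- It only reads the `User-agent: *` group.
- It ignores wildcard patterns.
- You have already fetched the file's text.

`scrapeOne` stands for whatever per-URL Puppeteer function you write. All names are hypothetical.

```javascript
// Minimal robots.txt check for the "User-agent: *" group only.
// Simplifications: no wildcard (*) or end-anchor ($) support, and groups
// addressed to specific crawler names are ignored.
function isAllowedByRobots(robotsTxt, urlPath) {
  const rules = [];
  let inStarGroup = false;
  let lastWasAgent = false;
  for (const rawLine of robotsTxt.split('\n')) {
    const line = rawLine.split('#')[0].trim(); // drop comments and whitespace
    const colon = line.indexOf(':');
    if (colon === -1) continue;
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === 'user-agent') {
      // Consecutive User-agent lines share one group of rules.
      inStarGroup = (lastWasAgent && inStarGroup) || value === '*';
      lastWasAgent = true;
      continue;
    }
    lastWasAgent = false;
    if (inStarGroup && (field === 'allow' || field === 'disallow') && value) {
      rules.push({ allow: field === 'allow', prefix: value });
    }
  }
  // The longest matching prefix wins; on a tie, Allow wins (as in RFC 9309).
  let best = null;
  for (const rule of rules) {
    if (!urlPath.startsWith(rule.prefix)) continue;
    if (!best || rule.prefix.length > best.prefix.length ||
        (rule.prefix.length === best.prefix.length && rule.allow)) {
      best = rule;
    }
  }
  return best ? best.allow : true;
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Visit URLs one at a time, skipping disallowed paths and pausing between requests.
async function politeCrawl(urls, robotsTxt, scrapeOne, { delayMs = 2000 } = {}) {
  const results = [];
  for (const url of urls) {
    const { pathname, search } = new URL(url);
    if (!isAllowedByRobots(robotsTxt, pathname + search)) {
      results.push({ url, skipped: true });
      continue;
    }
    results.push({ url, data: await scrapeOne(url) });
    await sleep(delayMs); // a fixed delay is the simplest form of rate limiting
  }
  return results;
}
```

Processing URLs sequentially with a fixed pause is deliberately conservative. A real crawler might also honor a site's `Crawl-delay` hint or back off when it sees error responses.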

Advanced Puppeteer Web Scraping Techniques

Beyond the basics, Puppeteer offers several advanced techniques for more sophisticated web scraping:

- Handling Dynamic Content: Use `page.waitForSelector()` to wait for a specific element to appear, or `page.waitForFunction()` to wait for an arbitrary condition in the page, before extracting data from dynamic websites.

- Pagination: Follow next-page links or step through page-numbered URLs to scrape data spread across multiple pages.

- Form Submission: Use `page.type()` and `page.click()` to fill out and submit forms.

- Handling Cookies: Use `page.cookies()` to read the current page's cookies and `page.setCookie()` to set them, for example to reuse a logged-in session.

- Headed Mode for Debugging: Headless mode is efficient, but launching with `puppeteer.launch({ headless: false })` opens a visible browser window, which can make debugging much easier.
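Dynamic-content waits and pagination often go together. The scraper waits for the items to render, collects them, and then follows a "next" link until there is none. In the sketch below, the selectors are placeholders and `maxPages` is a safety cap, both assumptions to adapt to the target site. It also assumes the next link triggers a full page navigation. For client-side pagination, you would wait for new items to appear instead of calling `page.waitForNavigation()`.

```javascript
// Hypothetical helper: collect item texts across paginated listing pages.
// `page` is a Puppeteer Page already pointed at the first listing page.
async function scrapeAllPages(page, { itemSelector, nextSelector, maxPages = 10 }) {
  const items = [];
  for (let pageNo = 1; pageNo <= maxPages; pageNo++) {
    // Wait until client-side rendering has produced at least one item.
    await page.waitForSelector(itemSelector, { timeout: 15000 });
    const texts = await page.$$eval(itemSelector, (els) =>
      els.map((el) => el.textContent.trim()),
    );
    items.push(...texts);

    // Stop when this page has no "next" link.
    const next = await page.$(nextSelector);
    if (!next) break;

    // Start waiting for the navigation together with the click,
    // so a fast page load cannot be missed.
    await Promise.all([
      page.waitForNavigation({ waitUntil: 'domcontentloaded' }),
      next.click(),
    ]);
  }
  return items;
}
```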

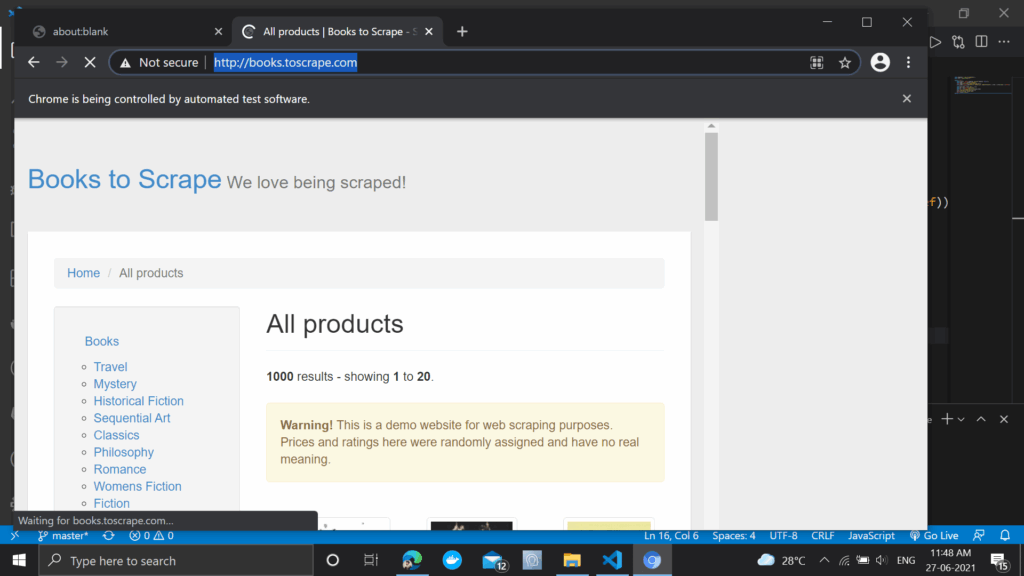

Example: Scraping Product Prices from an E-commerce Site

Let’s illustrate with an example of scraping product prices from an e-commerce site. Note that this is for illustrative purposes, and you should always respect the website’s terms of service and robots.txt.

```javascript
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto('https://www.example-ecommerce-site.com/products'); // Replace with actual URL

    const priceSelector = '.product-price'; // Replace with actual CSS selector
    // Wait for client-side rendering to insert the price elements.
    await page.waitForSelector(priceSelector);

    // Runs in the browser: collect the trimmed text of every matching element.
    const productPrices = await page.$$eval(priceSelector, elements =>
      elements.map(el => el.textContent.trim())
    );

    console.log('Product Prices:', productPrices);
  } finally {
    // Close the browser even if navigation or extraction fails.
    await browser.close();
  }
})();
```

This script navigates to a product page, extracts the text content of every element matching the `.product-price` selector, and logs the prices to the console. Remember to replace the placeholder URL and CSS selector with the actual values from the target website.
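Scraped prices arrive as display strings such as `$1,299.99`, so they usually need to be normalized and validated before they are stored or compared. The parser below is a minimal sketch that assumes US-style formatting, with a comma thousands separator and a dot decimal point. Other locales would need different rules. Values that do not parse are reported rather than silently dropped.

```javascript
// Hypothetical helper: turn a display price like "$1,299.99" into a number.
// Assumes comma thousands separators and a dot decimal point.
function parsePrice(text) {
  const cleaned = text.replace(/[^0-9.,-]/g, '').replace(/,/g, '');
  if (!/^-?\d+(\.\d+)?$/.test(cleaned)) return null; // not a plain number
  return Number(cleaned);
}

// Split raw strings into valid prices and rejects worth inspecting by hand.
function validatePrices(rawPrices) {
  const valid = [];
  const rejected = [];
  for (const raw of rawPrices) {
    const value = parsePrice(raw);
    if (value === null || value < 0) rejected.push(raw);
    else valid.push(value);
  }
  return { valid, rejected };
}
```

For example, `validatePrices(['$1,299.99', 'USD 15', 'Call for price'])` returns `{ valid: [1299.99, 15], rejected: ['Call for price'] }`. A sudden rise in rejects is often the first sign that the site's markup has changed.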

Troubleshooting Common Puppeteer Web Scraping Issues

While Puppeteer web scraping is powerful, you may encounter some common issues:

- Website Changes: Websites frequently change their structure, which can break your scraper. Regularly monitor your scraper and update it as needed.

- IP Blocking: Websites may block your IP address if they detect excessive scraping. Use proxies or rate limiting to mitigate this.

- CAPTCHAs: Websites may use CAPTCHAs to prevent automated scraping. Consider using a CAPTCHA solving service or implementing CAPTCHA handling logic in your scraper.

- JavaScript Errors: Errors in the target website's own JavaScript can stop content from rendering, so your selectors find nothing. Use `page.on('console', …)` and `page.on('pageerror', …)` to log the page's console output and uncaught exceptions while you debug your script.
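For the last two issues, it helps to surface what happens inside the page and to retry transient failures instead of crashing. The sketch below wires up the `console` and `pageerror` events mentioned above and adds a generic retry wrapper with exponential backoff. The retry count and delays are illustrative defaults, not values taken from the Puppeteer documentation. Retrying smooths over timeouts and flaky page loads, but it will not get past CAPTCHAs or a persistent IP block.

```javascript
// Forward console messages and uncaught errors from the page to Node.js.
function attachDebugLogging(page, log = console.log) {
  page.on('console', (msg) => log(`[page ${msg.type()}] ${msg.text()}`));
  page.on('pageerror', (err) => log(`[page error] ${err.message}`));
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry an async task, doubling the wait after each failed attempt.
async function withRetry(task, { retries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await task(attempt);
    } catch (err) {
      if (attempt >= retries) throw err; // out of retries: surface the error
      await sleep(baseDelayMs * 2 ** attempt); // 1s, 2s, 4s, ... by default
    }
  }
}
```

A typical call would wrap a navigation, for example `await withRetry(() => page.goto(url, { timeout: 30000 }))`.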

Alternatives to Puppeteer for Web Scraping

While Puppeteer is a great choice, other tools and libraries can also be used for web scraping, including:

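- Cheerio: A fast, flexible, and lean implementation of core jQuery designed specifically for server-side parsing and manipulation of HTML. Unlike Puppeteer, it does not run a browser or execute JavaScript, so it only sees the HTML it is given.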

- Beautiful Soup (Python): A Python library for pulling data out of HTML and XML files.

- Scrapy (Python): A powerful Python framework for web scraping and web crawling.

- Selenium: A browser automation tool that can be used for web scraping, similar to Puppeteer.

The best choice depends on your specific needs and programming language preferences.

Conclusion

Puppeteer web scraping combined with the collaboration features of GitHub provides a robust and efficient solution for extracting data from the web. By understanding Puppeteer’s capabilities, following best practices, and leveraging GitHub’s version control and automation features, you can build scalable and maintainable web scraping projects. Remember to always respect website terms of service and robots.txt to ensure ethical and responsible data extraction. With careful planning and implementation, Puppeteer web scraping can unlock valuable insights from the vast amount of data available online.

This comprehensive guide has provided a solid foundation for getting started with Puppeteer web scraping and GitHub. As you delve deeper into this topic, explore the Puppeteer API documentation and experiment with different techniques to find the best approach for your specific needs. Happy scraping!
