Unlock the Power of Data: A Deep Dive into Browser-Based Web Scrapers

Extracting information from the web is a core task for many businesses and researchers, and browser-based web scrapers are one of the most accessible ways to do it. These tools automate data collection from websites without requiring the programming effort of code-based solutions. This article covers how browser-based web scrapers work, their advantages and limitations, popular tools, use cases, and best practices, including ethical use and common pitfalls. By the end of this guide, you’ll have a solid understanding of when and how a browser-based web scraper fits your data extraction needs.

What is a Browser-Based Web Scraper?

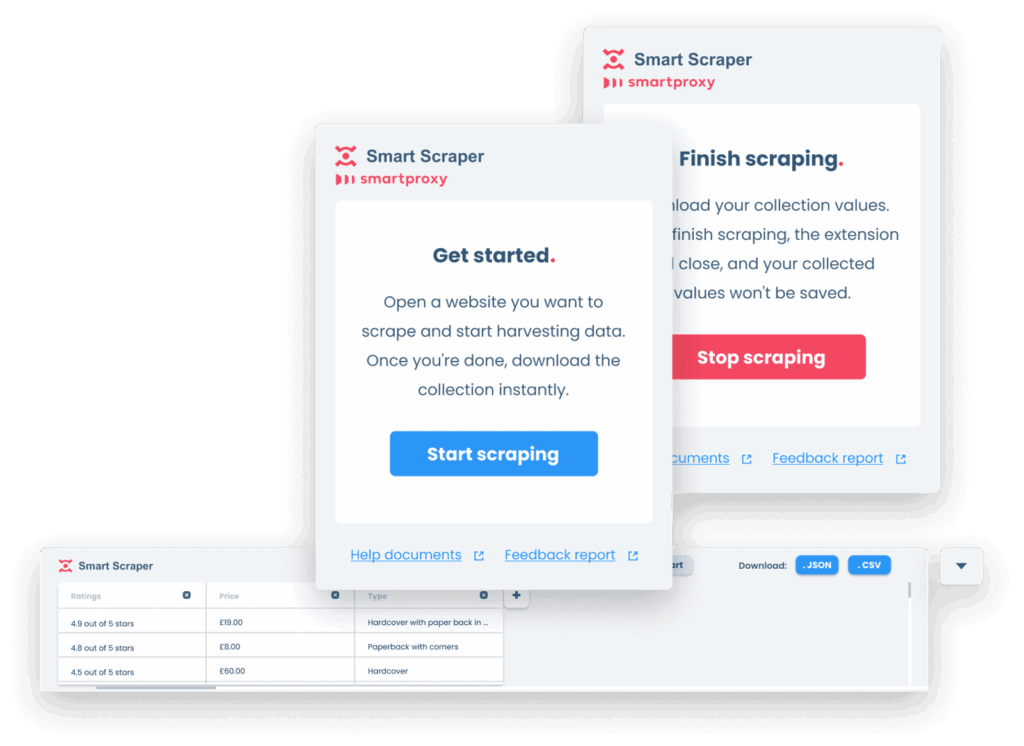

A browser-based web scraper is a software tool or extension that operates within a web browser (such as Chrome, Firefox, or Safari) to extract data from websites. Unlike traditional web scraping methods that involve writing code to send HTTP requests and parse HTML, browser-based web scrapers typically offer a user-friendly interface, often with point-and-click functionality, to select and extract the desired information. This makes them accessible to users without extensive programming knowledge.

These scrapers run inside a real browser, so each page is loaded and rendered just as it would be for a human visitor. The scraper navigates through the site’s pages and identifies specific elements (text, images, links, etc.) based on user-defined rules or selectors. The extracted data can then be exported in formats such as CSV, JSON, or Excel for further analysis.
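The select-and-export cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular extension is implemented: the HTML fragment, the `product-link` class, and the `LinkExtractor` helper are all hypothetical, and the standard-library parser stands in for a page the browser has already loaded and rendered.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical fragment standing in for a page the browser has already rendered.
SAMPLE_HTML = """
<ul>
  <li><a class="product-link" href="/item/1">Blue Mug</a></li>
  <li><a class="product-link" href="/item/2">Red Mug</a></li>
  <li><a class="ad-link" href="/promo">Sale!</a></li>
</ul>
"""


class LinkExtractor(HTMLParser):
    """Collects the text and href of every <a> tag that carries a given class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.rows = []
        self._current = None  # row being built while inside a matching <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "a" and self.target_class in classes:
            self._current = {"name": "", "url": attrs.get("href") or ""}

    def handle_data(self, data):
        if self._current is not None:
            self._current["name"] += data.strip()

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            self.rows.append(self._current)
            self._current = None


def extract(html, target_class):
    """Apply the 'rule' (a class name) to the page and return matching rows."""
    parser = LinkExtractor(target_class)
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "url"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = extract(SAMPLE_HTML, "product-link")
print(to_csv(rows))
print(json.dumps(rows, indent=2))
```

Point-and-click tools hide this step behind a visual selector, but the underlying idea is the same: a rule picks out elements, and each match becomes one row in the export.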

Advantages of Using Browser-Based Web Scrapers

Browser-based web scrapers offer several advantages over traditional coding-based methods:

- Ease of Use: The intuitive interface and point-and-click functionality make them accessible to users with limited or no programming skills.

- No Coding Required: Users can create scraping workflows without writing a single line of code.

- Dynamic Content Handling: Because they run inside the browser, these scrapers execute a page’s JavaScript and see the content it generates. This is a significant advantage over scrapers that only fetch the raw HTML, which miss dynamically loaded content unless they add a headless browser or call the site’s underlying data endpoints.

- Bypass Anti-Scraping Measures: Requests come from a real browser with its normal headers, cookies, and JavaScript execution, so they resemble ordinary visitor traffic more closely than requests from a bare HTTP client. This makes it harder for websites to detect and block them.

- Cost-Effective: Many browser-based web scrapers are available as free browser extensions or offer free tiers for basic usage, making them an affordable option for individuals and small businesses.

Limitations of Browser-Based Web Scrapers

While browser-based web scrapers offer numerous benefits, it’s important to be aware of their limitations:

- Scalability: Browser-based web scrapers are generally less scalable than traditional scrapers. Running multiple instances of a browser to scrape large amounts of data can be resource-intensive and slow.

- Performance: Scraping with a browser can be slower than using code-based scrapers, as the browser needs to load and render the entire webpage.

- Maintenance: Websites frequently change their structure, which can break existing scraping workflows. Users need to regularly update their scraping rules to adapt to these changes.

- Limited Functionality: Browser-based web scrapers may not offer the same level of customization and control as code-based scrapers.

- Dependence on Browser: The scraper’s functionality is tied to the browser it runs on. Updates to the browser or changes in its behavior can affect the scraper’s performance.

Popular Browser-Based Web Scrapers

Several browser-based web scrapers are available, each with its own features and capabilities. Here are a few popular options:

- Web Scraper: A popular Chrome extension that allows users to create scraping workflows using a point-and-click interface. It supports various data extraction tasks, including extracting text, images, and links.

- ParseHub: A desktop application that offers a visual interface for building web scraping projects. It supports advanced features such as pagination handling and data cleaning.

- Apify: A cloud-based platform that provides a range of web scraping tools and APIs, including a browser-based web scraper. It offers scalability and flexibility for handling large-scale data extraction tasks.

- Data Miner: A Chrome extension that allows users to extract data from tables and lists on websites. It offers a simple and intuitive interface for selecting and extracting data.

Ethical Considerations and Best Practices

Web scraping, including the use of browser-based web scrapers, should be conducted ethically and responsibly. It’s important to respect website owners’ terms of service and avoid overloading their servers with excessive requests. Here are some best practices to follow:

- Respect robots.txt: Check the website’s robots.txt file to see which paths the site asks automated clients not to access, and stay out of them.

- Limit Request Rate: Avoid sending too many requests in a short period of time. Implement delays between requests to avoid overloading the server.

- Identify Yourself: If your tool lets you set the User-Agent header, use one that identifies your scraper and, ideally, includes contact information so site operators can reach you.

- Use Data Responsibly: Use the extracted data only for lawful and ethical purposes. For example, do not republish copyrighted content or collect personal data without a lawful basis.

- Comply with Terms of Service: Always adhere to the website’s terms of service. If scraping is explicitly prohibited, refrain from doing so.
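Two of these practices, checking robots.txt and limiting the request rate, are easy to sketch with Python’s standard library. The robots.txt content, the `example-research-bot` name, and the helper functions below are hypothetical; a real run would load the file from the target site with `RobotFileParser.set_url()` and `read()` instead of parsing an inline string.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs without network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-research-bot"  # hypothetical scraper name


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_visit(urls, delay_seconds, visit, sleep=time.sleep):
    """Call visit(url) for each URL, pausing between consecutive calls."""
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)
        visit(url)


parser = build_parser(ROBOTS_TXT)
candidates = [
    "https://example.com/products",
    "https://example.com/private/data",
    "https://example.com/reviews",
]
# Use the site's requested delay if it states one, otherwise fall back to 1 second.
delay = parser.crawl_delay(USER_AGENT) or 1

# The demo prints instead of sleeping; drop the sleep argument to wait for real.
polite_visit(
    allowed_urls(parser, candidates),
    delay,
    visit=lambda url: print("visiting", url),
    sleep=lambda seconds: print(f"(waiting {seconds}s)"),
)
```

Passing `sleep` as a parameter keeps the pacing logic testable; in a point-and-click tool the equivalent setting is usually a configurable delay between page loads.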

Use Cases for Browser-Based Web Scrapers

Browser-based web scrapers can be used in a variety of applications, including:

- E-commerce Price Monitoring: Track prices of products on different e-commerce websites to identify the best deals.

- Market Research: Collect data on market trends, competitor pricing, and customer reviews.

- Lead Generation: Extract contact information from websites to generate leads for sales and marketing.

- Data Aggregation: Collect data from multiple sources and consolidate it into a single database.

- News Monitoring: Track news articles and social media mentions related to specific topics.
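For price monitoring in particular, scraping is only half of the job; the other half is comparing each run with the previous one. A minimal sketch, assuming each scraping run yields a mapping from product name to price in cents (the `price_changes` helper, product names, and prices below are made up for illustration):

```python
def price_changes(previous, current):
    """Return {product: (old_price, new_price)} for products whose price changed."""
    changes = {}
    for product, new_price in current.items():
        old_price = previous.get(product)
        # Skip products that are new in this run or whose price is unchanged.
        if old_price is not None and old_price != new_price:
            changes[product] = (old_price, new_price)
    return changes


# Hypothetical snapshots from two scraping runs; prices are integers in cents.
yesterday = {"Blue Mug": 1299, "Red Mug": 1499, "Teapot": 2999}
today = {"Blue Mug": 1199, "Red Mug": 1499, "Kettle": 3999}

for product, (old, new) in price_changes(yesterday, today).items():
    print(f"{product}: {old / 100:.2f} -> {new / 100:.2f}")
```

Storing prices as integer cents avoids floating-point rounding surprises when comparing values between runs.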

How to Choose the Right Browser-Based Web Scraper

Selecting the right browser-based web scraper depends on your specific needs and technical expertise. Consider the following factors when making your decision:

- Ease of Use: Choose a scraper with an intuitive interface that is easy to learn and use.

- Features: Consider the features offered by the scraper, such as pagination handling, data cleaning, and data export options.

- Scalability: If you need to scrape large amounts of data, choose a scraper that can handle the volume, such as a cloud-based service that runs jobs on remote servers rather than in your local browser.

- Price: Compare the pricing plans of different scrapers and choose one that fits your budget.

- Customer Support: Check the quality of customer support offered by the scraper provider.

Advanced Techniques for Browser-Based Web Scraping

While browser-based web scrapers are designed to be user-friendly, some advanced techniques can help you improve your scraping efficiency and accuracy:

- Using CSS Selectors: Learn how to use CSS selectors to target specific elements on a webpage by tag, class, ID, or position in the page structure.

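- Handling Pagination: Navigate through multiple pages of a website, for example by following “next” links, stepping through page numbers in the URL, or scrolling to trigger infinite-scroll loading.

- Dealing with AJAX: For websites that load content dynamically using AJAX, wait until the target elements have appeared on the page before extracting them.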

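- Rotating User Agents: Some scrapers vary the User-Agent string between requests to reduce the chance of being blocked. This works against the practice of identifying yourself, so use it only where scraping is permitted.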

- Using Proxies: Use proxies to mask your IP address and avoid being blocked.
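Of the techniques above, pagination is the easiest to illustrate. The sketch below follows “next” links until none remain, with a guard against loops and a page limit. It assumes a `fetch` function that returns a page’s items together with the URL of the next page; `FAKE_SITE`, `fetch_page`, and `scrape_all_pages` are hypothetical names, and the in-memory dictionary stands in for real page loads.

```python
# Hypothetical in-memory "site": URL -> (items on the page, next page URL or None).
FAKE_SITE = {
    "/list?page=1": (["a", "b"], "/list?page=2"),
    "/list?page=2": (["c"], "/list?page=3"),
    "/list?page=3": (["d", "e"], None),
}


def fetch_page(url):
    """Stand-in for loading a page and extracting its items and 'next' link."""
    return FAKE_SITE[url]


def scrape_all_pages(start_url, fetch=fetch_page, max_pages=50):
    """Follow 'next' links from start_url and collect the items of every page."""
    items = []
    seen = set()  # visited URLs, so a link cycle cannot loop forever
    url = start_url
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page_items, url = fetch(url)
        items.extend(page_items)
    return items


print(scrape_all_pages("/list?page=1"))
print(scrape_all_pages("/list?page=1", max_pages=2))
```

The `max_pages` cap is a safety net as well as a courtesy: it bounds the number of requests even if the site’s pagination turns out to be much deeper than expected.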

The Future of Browser-Based Web Scraping

Browser-based web scraping continues to evolve. Advances in artificial intelligence and machine learning are likely to produce scrapers that adapt automatically to website changes and extract data more accurately. We can also expect closer integration with cloud platforms and APIs, making large-scale scraping projects easier to build and deploy. Meanwhile, the ongoing evolution of anti-scraping technologies will keep driving innovation, leading to more sophisticated and resilient browser-based web scrapers.

Conclusion

Browser-based web scrapers are a convenient, accessible way to extract valuable data from the web without writing code, which makes them suitable for users with varying levels of technical expertise. By understanding their advantages, limitations, and best practices, you can decide when they are the right tool and use them to support informed decision-making. Always scrape ethically and responsibly: respect website owners’ terms of service and avoid overloading their servers.