Unlocking Data’s Potential: A Deep Dive into Screen Scraping Tools

In today’s data-driven world, accessing and extracting information efficiently is paramount. While APIs provide structured data access, many websites don’t offer them, leaving valuable data locked behind HTML code. This is where screen scraping tools come into play. These tools enable users to automatically extract data from websites, transforming unstructured HTML into structured data that can be analyzed, integrated, and utilized for various purposes. This article will explore the world of screen scraping tools, examining their functionalities, applications, and best practices.

What are Screen Scraping Tools?

Screen scraping tools, also known as web scraping tools, are software applications designed to extract data from websites. They work by requesting web pages much as a browser does, navigating between them, identifying specific data elements, and extracting those elements into a structured format, such as CSV, JSON, or an Excel spreadsheet. These tools automate the work of copying and pasting information from websites, saving time and effort while providing access to data that would otherwise be difficult or impractical to collect by hand.

How Screen Scraping Works

The process of screen scraping typically involves the following steps:

- Requesting the Web Page: The tool sends an HTTP request to the target website, similar to how a web browser requests a page.

- Parsing the HTML: Once the HTML content is received, the tool parses it to understand the structure and identify the elements containing the desired data.

- Locating Data Elements: Using techniques like CSS selectors, XPath, or regular expressions, the tool pinpoints the specific data elements to be extracted.

- Extracting the Data: The tool extracts the data from the identified elements.

- Structuring the Data: The extracted data is then structured into a desired format, such as a table, list, or JSON object.

- Saving the Data: Finally, the structured data is saved to a file or database for further analysis or use.
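The steps above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration rather than a production scraper: the sample HTML, the `product`, `name`, and `price` class names, and the `example-scraper/0.1` user agent are all assumptions made up for the example, and the `fetch` function is defined but not called so the sketch runs without network access.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# Hypothetical sample page; a real run would fetch this over HTTP instead.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">24.50</span></li>
</ul>
"""

def fetch(url):
    """Step 1: request the page (not called below, to keep the sketch offline)."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")

class ProductParser(HTMLParser):
    """Steps 2-4: parse the HTML, locate spans by class, and extract their text."""

    def __init__(self):
        super().__init__()
        self.rows = []       # one dict per product
        self._field = None   # class of the span currently being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self.rows.append({})
        elif tag == "span" and self.rows and classes and classes[0] in ("name", "price"):
            self._field = classes[0]

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

def scrape(html):
    parser = ProductParser()
    parser.feed(html)
    # Step 5: structure the data, converting prices to numbers.
    return [{"name": r["name"], "price": float(r["price"])} for r in parser.rows]

def to_csv(rows):
    """Step 6: serialize the rows; writing to a file works the same way."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

rows = scrape(SAMPLE_HTML)
print(to_csv(rows))
```

In practice, a dedicated parsing library with CSS selector or XPath support usually replaces the hand-written parser class, but the sequence of steps stays the same.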

Types of Screen Scraping Tools

Screen scraping tools come in various forms, each with its own strengths and weaknesses. Here are some common types:

Browser Extensions

Browser extensions are lightweight tools that integrate directly into web browsers like Chrome or Firefox. They are often easy to use and suitable for simple screen scraping tasks. Examples include Web Scraper and Data Miner.

Desktop Software

Desktop software offers more advanced features and capabilities than browser extensions. It typically provides a graphical user interface (GUI) for designing scraping workflows and handling more complex scenarios. Examples include Octoparse and ParseHub.

Cloud-Based Scraping Platforms

Cloud-based platforms offer scalable and reliable screen scraping solutions. They handle the infrastructure and maintenance, allowing users to focus on defining the scraping logic. Examples include Apify and Zyte (formerly Scrapinghub).

Programming Libraries

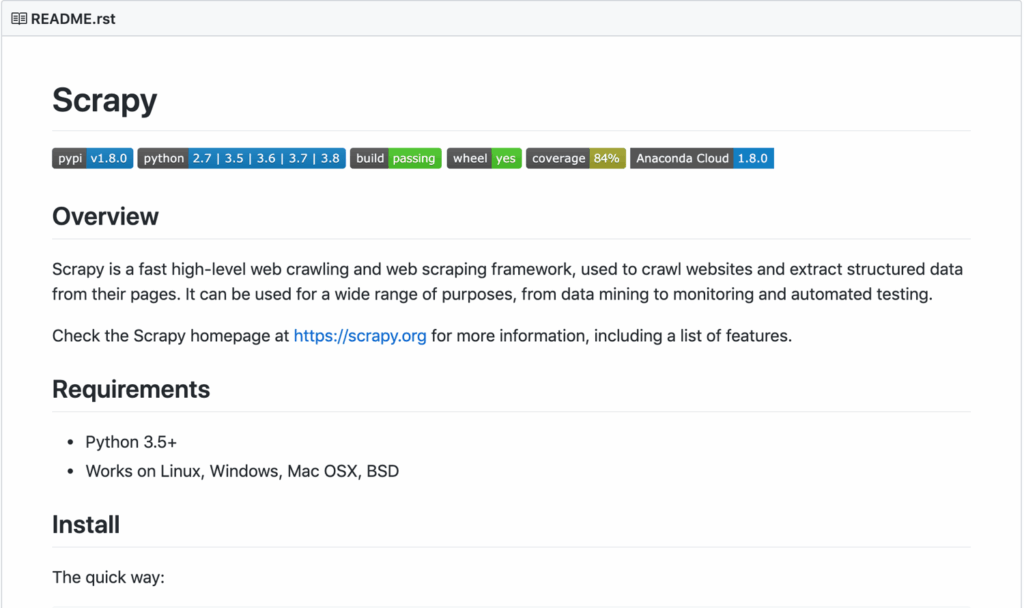

Programming libraries, such as Beautiful Soup and Scrapy in Python, provide developers with the flexibility to build custom screen scraping solutions. These libraries require programming knowledge but offer the most control and customization options.

Applications of Screen Scraping Tools

Screen scraping tools have a wide range of applications across various industries:

E-commerce

Screen scraping is used to monitor competitor pricing, track product availability, and gather customer reviews. This information helps businesses make informed decisions about pricing strategies, inventory management, and product development.

Finance

In the finance industry, screen scraping is used to collect financial data, such as stock prices, exchange rates, and news articles. This data is used for market analysis, investment decisions, and risk management.

Marketing

Marketers use screen scraping to gather leads, monitor brand mentions, and analyze social media trends. This information helps them understand customer behavior, optimize marketing campaigns, and improve brand reputation.

Real Estate

Screen scraping is used to collect property listings, track market trends, and analyze real estate data. This information helps real estate agents, investors, and developers make informed decisions about buying, selling, and developing properties.

Research

Researchers use screen scraping to gather data for academic studies, market research, and scientific investigations. This data can be used to analyze trends, identify patterns, and draw conclusions.

Benefits of Using Screen Scraping Tools

Using screen scraping tools offers several benefits:

- Automation: Automates the process of data extraction, saving time and effort.

- Efficiency: Extracts large amounts of data quickly and accurately.

- Accessibility: Provides access to data that is not available through APIs.

- Cost-effectiveness: Reduces the need for manual data entry and research.

- Flexibility: Can be used to extract data from various websites and in different formats.

Ethical Considerations and Legal Issues

While screen scraping can be a powerful tool, it’s important to consider the ethical and legal implications. Some websites prohibit screen scraping in their terms of service, and violating these terms can lead to legal consequences. It’s also important to avoid overwhelming websites with requests, as this can degrade performance or, in the worst case, amount to a denial of service. Respecting robots.txt files, in which site owners state which paths automated clients are asked not to access, is crucial.
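As a rough illustration of the robots.txt check, Python's standard `urllib.robotparser` module can evaluate a URL against a site's rules. The robots.txt content, the `example-scraper` user agent, and the example.com URLs below are hypothetical; a real scraper would download the file from the target site rather than embed it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice it is served at <site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

def build_rules(robots_txt):
    """Parse robots.txt text into a rule set that can be queried per URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

USER_AGENT = "example-scraper"  # assumed name for this sketch
rules = build_rules(ROBOTS_TXT)

# Ask before fetching: is this path open to our user agent?
print(rules.can_fetch(USER_AGENT, "https://example.com/products/1"))
print(rules.can_fetch(USER_AGENT, "https://example.com/private/report"))

# Some sites also request a minimum pause between requests, in seconds.
print(rules.crawl_delay(USER_AGENT))
```

Note that robots.txt is advisory: it expresses the site owner's wishes but does not replace reading the terms of service.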

Best Practices for Screen Scraping

To ensure successful and ethical screen scraping, follow these best practices:

- Respect robots.txt: Always check the robots.txt file to see which parts of the website should not be scraped.

- Limit Request Rate: Avoid overwhelming the website by limiting how often you send requests. Add delays between requests so that the load on the server stays close to that of an ordinary visitor.

- Use User Agents: Send a descriptive User-Agent string that identifies your scraper, so that website administrators can recognize its traffic and block it if necessary.

- Handle Errors Gracefully: Implement error handling to gracefully handle unexpected situations, such as website downtime or changes in HTML structure.

- Store Data Responsibly: Store the extracted data securely and comply with data privacy regulations.

- Monitor Performance: Monitor the performance of your scraper to ensure it is running efficiently and not causing any issues for the target website.
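Several of these practices (a descriptive user agent, a delay between requests, and graceful error handling) can be combined in one small helper. The following is a sketch under stated assumptions, not a reference implementation: the function names, the user agent string, the two-second default delay, and the retry count are all illustrative choices. The `fetch` and `sleep` parameters exist only so the helper can be tried out without touching the network.

```python
import time
from urllib.request import Request, urlopen

# Hypothetical identifier; a real one should point to a way to contact you.
USER_AGENT = "example-scraper/0.1 (+https://example.com/contact)"

def http_get(url):
    """Fetch one page, identifying the scraper via the User-Agent header."""
    request = Request(url, headers={"User-Agent": USER_AGENT})
    with urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")

def polite_fetch_all(urls, fetch=http_get, delay=2.0, retries=2, sleep=time.sleep):
    """Fetch URLs one at a time, pausing between requests and retrying failures."""
    results, failures = {}, {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay)  # fixed pause between consecutive URLs
        for attempt in range(retries + 1):
            try:
                results[url] = fetch(url)
                break
            except OSError as error:  # covers URLError, timeouts, connection errors
                if attempt == retries:
                    failures[url] = str(error)  # give up and record the reason
                else:
                    sleep(delay * 2 ** attempt)  # back off before retrying
    return results, failures
```

Calling `polite_fetch_all(["https://example.com/a", "https://example.com/b"])` would return one dictionary of page contents and one of error messages, so a failed page is recorded rather than stopping the whole run.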

Choosing the Right Screen Scraping Tool

Selecting the right screen scraping tool depends on your specific needs and technical expertise. Consider the following factors when making your decision:

- Complexity of the Task: For simple tasks, browser extensions or desktop software may suffice. For more complex tasks, cloud-based platforms or programming libraries may be necessary.

- Scalability Requirements: If you need to scrape large amounts of data, cloud-based platforms are typically the best option due to their scalability.

- Technical Expertise: Programming libraries require programming knowledge, while browser extensions and desktop software are generally easier to use for non-technical users.

- Cost: Screen scraping tools range in price from free to thousands of dollars per month. Consider your budget when making your decision.

- Features: Look for tools that offer the features you need, such as data structuring, scheduling, and proxy support.

Examples of Screen Scraping Tools

Here are some popular screen scraping tools available:

- Octoparse: A desktop-based tool with a user-friendly interface and powerful features.

- ParseHub: A desktop and cloud-based tool that offers both free and paid plans.

- Apify: A cloud-based platform that provides a wide range of scraping tools and services.

- Zyte (formerly Scrapinghub): A cloud-based platform that offers a comprehensive suite of scraping tools and services.

- Beautiful Soup (Python): A Python library for parsing HTML and XML.

- Scrapy (Python): A Python framework for building web scrapers.

- Web Scraper (Chrome Extension): A free Chrome extension for simple screen scraping tasks.

Future of Screen Scraping

The future of screen scraping is likely to be shaped by advancements in artificial intelligence (AI) and machine learning (ML). AI-powered screen scraping tools will be able to automatically adapt to changes in website structure and extract data more accurately. ML algorithms can be used to identify and extract specific data elements without requiring manual configuration. As websites become more dynamic and complex, AI and ML will play an increasingly important role in screen scraping.

Conclusion

Screen scraping tools are essential for extracting data from websites that lack APIs. By understanding the different types of tools, their applications, and best practices, you can leverage screen scraping to unlock valuable data and gain a competitive advantage. However, it’s crucial to be mindful of ethical and legal considerations and to use screen scraping tools responsibly. Whether you’re monitoring competitor pricing, gathering leads, or conducting research, screen scraping can be a powerful tool for unlocking data’s potential.